Articles | 2018 Video Processing Guide

This article is an extension of this article as well as this article. It is assumed you've read the associated material and are familiar with the terminology, subject matter and processes therein.

In this article I am going to discuss the reasoning and processes involved in transitioning programs and certain practices from my previous editing pipeline, described in the previous article, to the one described in this article.

Premise

In mid-2018, during extensive discussion with related video developers, we came to new discoveries and improvements in our video editing pipeline. As is common with such discourse, a great deal of time went into stress testing, comparing, and challenging theories established by these discoveries with the intent of improving the overall quality of our media and, in my case specifically, delivering that quality with minimal impact on size and complications for my viewers.

This particular research period was devoted to perfecting colorspace conversions and a feature of x264 known as Chroma Subsampling. I'll discuss the two subjects separately in this article, but know that they are actually extremely important to each other, which I'll discuss further when we start making comparisons between old and new encodes.

Avoiding Colorspace Crushing

Source vs YUV encode, which ultimately gets filtered to look very similar to the Source by MPC (specifically LAVFilter in our case). You'll find that most common video media on the PC is encoded in YUV or some derivative of it and undergoes similar filtering to not look blown out, but the process in doing so has plenty of Astolfo plushies waiting to pounce on the unsuspecting and notice their bulge. Colorspaces may be traps. Know the locus before you poke it.

Fraps typically encodes its recorded videos as YUV. What does the internet have to say about YUV?

It encodes a color image or video taking human perception into account, allowing reduced bandwidth for chrominance components, thereby typically enabling transmission errors or compression artifacts to be more efficiently masked by the human perception than using a "direct" RGB-representation. Other color encodings have similar properties, and the main reason to implement or investigate properties of YUV would be for interfacing with analog or digital television or photographic equipment that conforms to certain Y′UV standards. - Source: Wikipedia

As we explored a half-year ago now, most colorspaces are like dinosaurs that somehow endured God's almighty and righteous smiting of a hedonistic paradise and have transformed into these unsightly abominations in more shapes and sizes than you can shake a cute penis at. As discussed in the 2018 x264 article, I was running into issues with PTBI, component cables, and Limited (16-235) vs Full (0-255) colorspaces which were really fornicating with my Ico run. Awareness of these kinds of conversions eventually led to us challenging all portions of the video pipeline we were using.

It should go without saying, but the process of converting from Fraps to x264 is a similar process to the one of Playstation 3 to the monitor. It is highly unlikely that anyone sane is actually making the software responsible, so chances are there's a ton of rigmarole cucks getting their spouses exchanged in the local leather club. Indeed, just to get picture on your monitor you're going through some kind of conversion from your GPU and sometimes monitors also stick their dick into the pot. Since we're usually looking at RGB, or converting to RGB, we have quite a few links in the chain between the software we're recording and then the final image we're looking at once we've encoded a video of that software.

Weirdly, despite the videos looking full-range even after all the changes we've done to the process over the years, they're still claimed to be Limited Range by MPC when using statistics. In fact, in order to ensure we are getting an unprocessed frame out of a video we need to use an ffmpeg command to extract a lossless PNG. This behavior is what initially sparked research into why these things were happening and if they were possible to fix, and what impact fixing them would have. It isn't as simple as just changing a few values and never changing the colors in a video after it's recorded, though. The few communities surrounding x264 have made it extremely hard to actually find out what is necessary to create a high-quality encode that avoids unnecessary color crushing and conversions, and the information you normally have available isn't really the entire story.

Details can slip through the cracks, and we need to make absolutely sure the signal we're actually getting is the same signal that we got out of fraps. There are a ton of extremely important and entirely unsung commands for x264 that will determine if what we get out of an encode is total trash or not, and even more commands that can greatly impact an otherwise accurate image. This isn't usually obvious, since properly configured video players themselves do a fairly good job at trying to sweep sin under the carpet.

The frame that your video player is showing you is rarely what is actually contained inside the video. This is the case with probably all of my videos to this point, actually. MPC, madvr and other systems are all sort of designed to handle this kind of material and hand you a calibrated image that is correct. When we talk about vegas and videos that use the editing-friendly pipeline, such as for example XXE or the WoW Rant Series 2, however, things get sloppier since we usually silently do conversions just to get things into the unstable pile of garbage that is Sony Vegas to begin with.

That means that there are at least four steps our Fraps video is taking to be presented to the media player if we're using Vegas.

1.) Game -> Fraps

This process is already lossy unless you use the Fraps "RGB Lossless" feature. The problem with this feature is it actually doubles the bitrate on the produced videos and causes me significant I/O stalling. The videos are up to 60% larger in my few tests. I can't actually use this feature. If I could, I'd have RGB videos and I could effectively cut almost all of the color conversions going on with the video. I can't, so this part of the process isn't really avoidable. However, the YUVJ colorspace that fraps uses isn't actually too bad. If we're going to have unavoidable colorspace conversions we may as well try to use the least destructive ones possible, which means we want to compare our encoded frames to the fraps source frames.

This process has never been quite perfect for me. The videos may look similar, but they're not.

2.) Fraps -> Vegas

Fraps Source - Encoded Frame - Vegas Frame

There are two major things to note in these comparisons. The first is that, very clearly, the frame actually gets darker when encoded. It gets even darker when taken from Vegas. The second is that the text completely changes colors in many cases - especially the blues. This in particular is very noticeable in WoW's recordings, where the red text is very commonly fucked up.

That's correct, the encodes, no matter what pipeline they use, have never been quite 1:1 with fraps, and fraps was never quite 1:1 with the game.

To cut a long-winded rabbit chase short, the Drunk Swede eventually concluded that in order to get otherwise lossless encodes with fraps you need to go through quite the overhaul in your configurations. The first step is to use lossless RGB in fraps. Neither vegas nor the frameserver innately crush colors so long as you use lossless source from fraps. I can't use lossless RGB in fraps, though, so I have to resign myself to some inescapable conversions.

3.) Frameserver

The frameserver is some alpha software with very spotty information. I've heard that there are many sources claiming it only supports 16-235, but this is, as usual, incorrect. It goes without saying, but frameserver is also different from fraps. If your fraps footage is RGB and vegas is set up correctly then the frameserver will be fine. If it isn't, then there'll be some extremely minor inconsistency between fraps and the final encode. However, this is significantly less destructive than what the final step can do.

4.) Encode Time

At encode time we were using an AVS script to feed megui our videos, be they fraps videos directly or an edited video from the frameserver. The AVS script contains commands, and these commands are not the same thing as megui's or ffmpeg's commands - they're basically manipulating the source file handed to those commands. It's like a two-step chain. This means we have to be really, really careful. A whole whack of horrifying revelations came up regarding this specific piece of the pipeline when we really sank our jockstrap into it. For now, let's look at this command:

ConvertToYV12(matrix="rec709")

Aside from the fact we're typically converting from RGB (vegas base colorspace) to whatever the frameserver is set to, we're still not using the frameserver settings because we're converting back to YV12 to ultimately get this rec709 colorspace. It defaults to rec601 - we've manually set it to 709 for years because it fixed a ton of color issues.
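For a rough picture of what that matrix argument actually changes, here's a minimal Python sketch of the RGB <-> YUV math using the published Rec.601 and Rec.709 luma weights. It works on normalized full-range values (0.0 to 1.0), and the function names are made up for illustration. This is the textbook math only, and I'm not claiming it's how Avisynth does it internally.

```python
# Luma weights (Kr, Kb) for the two matrices; Kg is whatever is left over.
COEFFS = {"601": (0.299, 0.114), "709": (0.2126, 0.0722)}

def rgb_to_ycbcr(r, g, b, matrix):
    """Convert normalized full-range RGB to Y, Cb, Cr with the given matrix."""
    kr, kb = COEFFS[matrix]
    kg = 1 - kr - kb
    y = kr * r + kg * g + kb * b
    cb = (b - y) / (2 * (1 - kb))
    cr = (r - y) / (2 * (1 - kr))
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr, matrix):
    """Invert rgb_to_ycbcr, assuming the data was encoded with `matrix`."""
    kr, kb = COEFFS[matrix]
    kg = 1 - kr - kb
    r = y + 2 * (1 - kr) * cr
    b = y + 2 * (1 - kb) * cb
    g = (y - kr * r - kb * b) / kg
    return r, g, b

# Pure red, encoded as 709, then decoded correctly and incorrectly.
y, cb, cr = rgb_to_ycbcr(1.0, 0.0, 0.0, "709")
print("decoded as 709:", ycbcr_to_rgb(y, cb, cr, "709"))
print("decoded as 601:", ycbcr_to_rgb(y, cb, cr, "601"))
```

Decoding with the same matrix gives the original color back. Decoding with the other one shifts it, which is the kind of thing that shows up as off-color text when two links in the chain disagree about which matrix is in use.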

Nowhere on the internet have I been able to find exhaustive information regarding these commands and converting fraps videos so far, mostly just people lamenting how difficult it seems to be to convert fraps to x264. However, the critical information for this command is tucked away in the Avisynth command documentation and not in your face like it should be. Even navigating this documentation is surprisingly testing, but luckily I have drunks to find things for me.

The second one (Rec.709) should be used when your source is DVD or HDTV - Source: Avisynth Documentation

Apparently, rec709 is not actually what we want to be using. No, no sir. We're not using some archaic physical hardware to capture our image. Still.. rec709 gave us better colors than the default. What exactly makes it a TV thing?

In v2.56, matrix="pc.601" (and matrix="pc.709") enables you to do the RGB <-> YUV conversion while keeping the luma range, thus RGB [0,255] <-> YUV [0,255] (instead of the usual/default RGB [0,255] <-> YUV [16,235]). - Source: Avisynth Documentation

.. Oh.

... Oh.

And, suddenly, we know why everything is getting so goddamn dark. What doesn't make sense is why this is the default behavior! Why the fuck would PC software for encoding videos on a PC, most often for use on a PC, default to settings and behavior for a fucking television set!? Even more astoundingly, why isn't this information more in your face when it's this destructive? What the fuck!? Even more bizarrely, there are recommendations out there for people using Full Range TVs to use limited range anyways! The only reason Limited Range even exists is because of analog TV signals, squeezing out bandwidth and using the "unused" ranges for other information. It's utterly bamboozling why anyone would even consider using this in the digital era when we could have proper image quality instead in exchange for entirely insignificant file size changes. Somehow these archaic practices continue to be recommended and used in 2018 digital content for the PC. Jesus Christ.
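To put numbers on the Limited vs Full thing, here's a small Python sketch of the 8-bit luma scaling between 0-255 and 16-235. The function names are mine, it only covers luma (limited-range chroma uses a slightly different span), and real converters may round or dither differently, so treat it as an illustration rather than what any specific tool does.

```python
def full_to_limited(v):
    """Squeeze an 8-bit full-range luma value (0-255) into limited range (16-235)."""
    return round(16 + v * 219 / 255)

def limited_to_full(v):
    """Stretch a value assumed to be limited range out to 0-255, clipping overshoot."""
    return max(0, min(255, round((v - 16) * 255 / 219)))

# How many distinct levels survive being squeezed into 16-235?
surviving = len({full_to_limited(v) for v in range(256)})
print("levels left after full -> limited:", surviving, "of 256")

# What happens when full-range data gets treated as limited and stretched:
# everything at or below 16 becomes black, everything at or above 235 becomes white.
crushed_shadows = [limited_to_full(v) for v in (0, 8, 16)]
crushed_highlights = [limited_to_full(v) for v in (235, 245, 255)]
print("shadows:", crushed_shadows, "highlights:", crushed_highlights)
```

The squeeze alone merges 36 of the 256 levels into their neighbours. The stretch applied to footage that was already full range is worse, since the darkest and brightest detail gets clipped outright.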

What we actually want to be using is pc.709, also known as bt709. Unfortunately, I wasn't able to get megui to behave with these very well, and it kept destroying the colors in my videos. If I can get the following settings to play nice with megui I'll update this article, but until then, ffmpeg is a requirement to take advantage of some of the things mentioned in this article for this kind of footage.

Oddly, the story of bt709 and rec709 doesn't quite end here. During some research, I came to the discovery that both bt709 and rec709 were, effectively, the same thing.

ITU-R Recommendation BT.709, more commonly known by the abbreviations Rec. 709 or BT.709, standardizes the format of high-definition television, having 16:9 (widescreen) aspect ratio. The first edition of the standard was approved in 1990. - Source: Wikipedia

Indeed, this rabbit hole gets rather deep. The naming conventions imply they are the same, but in practice Avisynth handles things very differently than normal. Given the conflicting information out there, and the fact Avisynth does things very differently, this is clearly a standard that isn't adhered to very thoroughly.

Poking into a document released on the ITU website yields some more information on the subject of the naming conventions and offers us insight into some potential roots of the vast quantity of misinformation circulating the colorspace arena. While the document is mostly technical stuff I am not familiar with, there are some operative words in the initial text that seem worth bringing up.

The ITU Radiocommunication Assembly,

considering

...

recommends

that for HDTV programme production and international exchange, one of the systems described in this Recommendation, should be used.

The document is more of a technical reference, and doesn't offer us a real definition for the terms up-front. Rec clearly stands for Recommendation, and in most cases I've been able to dig up the term is treated as synonymous with bt for this colorspace. Furthermore, the system has its roots in television, where color crushing is not simply accepted but desired by modern amateur producers rather than seen as a defect or limitation, much like Chromatic Aberration. The related text, terminology, and especially tools, have yet to see any kind of motion towards exposing this extremely important information to developers of most video productions much less their consumers.

Rec709, or the recommended standard, is typically crushed. That's right - it is typically recommended to destroy visual data in media, even when working with digital hardware such as a personal computer. bt709 as a full-range colorspace doesn't have such a recognized title, and is used interchangeably for either crushed or full range depending on the software and implementation, because it isn't "recommended". Insanity.

This doesn't answer the question why destructively crushing data is the default behavior in avisynth, though. I don't suppose we'll ever quite fully be able to grasp the understanding of those who taste feet. At the end of the day, we want to 100% be sure we're not using something with analog TV range colors in digital media, so we should always refer to the correct colorspace as bt709.

This wouldn't be the end of the discoveries we made when we put our dicks together in the hot tub. It gets dumber. Oh, does it get dumber. This takes us to our second subject.

Chroma Subsampling

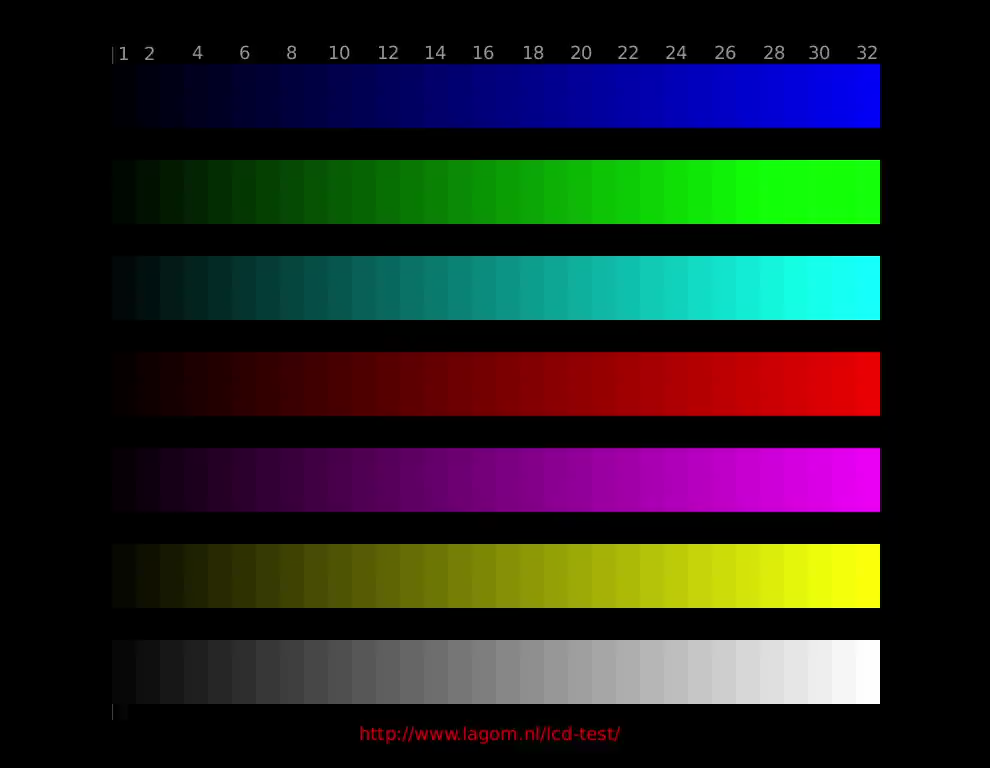

Chroma Subsampling is related to how x264 stores visual information. Nefarius told me to think of the video data as "layers of macroblocks that control lighting and color independently". For example, two layers are Chroma (color) and Luminance. Encoding the color information separately in this manner allows for compression for truecolor. Furthermore, when the information is granulated in this manner, they can be fine tuned for compression - for example, one pixel of color information could be used for four pixels of lighting information or vice versa. In effect, the final image is an overlay of three grids - and those three grids can account for small value changes for a higher chance of repetition instead of accounting for a stream that has 2^24 possible combinations. Specifically, this feature is what the "420 smoke weed every day" part of yuv420p10le in my videos means. Except... in this rare case, smoking weed is actually very very very very bad.

Chroma Subsampling is a form of compression independent from the rest of the subjects we've discussed thus far. The 420 profile is actually the heaviest form of compression for the feature, and basically removes color information - effectively taking resolution out of the Chroma macroblock grid - to save an extremely insignificant amount of space - a mere 60mb in a colossal 1.6gb encode! The 444 profile is what we want. There's no gain to be had from outright removing data - we're using the Constant Rate Factor (CRF) setting to control our compression in the first place.
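Here's a rough Python sketch of what the 420 and 444 layouts mean in terms of raw samples, plus a toy version of what happens to a one-pixel-wide colored line when its chroma plane is subsampled. The helper names are made up, and the 2x2 average with nearest-neighbour upsampling is an assumption for illustration only. Real encoders and players use their own filters.

```python
def samples_per_frame(width, height, fmt):
    """Count raw samples in one frame: one luma plane plus two chroma planes."""
    h_div, v_div = {"444": (1, 1), "422": (2, 1), "420": (2, 2)}[fmt]
    luma = width * height
    chroma = 2 * (width // h_div) * (height // v_div)
    return luma + chroma

def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (assumes even dimensions)."""
    return [
        [(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
         for x in range(0, len(plane[0]), 2)]
        for y in range(0, len(plane), 2)
    ]

def upsample_nearest(plane):
    """Blow a subsampled plane back up by repeating every sample 2x2."""
    out = []
    for row in plane:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

print("1080p 444 samples:", samples_per_frame(1920, 1080, "444"))
print("1080p 420 samples:", samples_per_frame(1920, 1080, "420"))

# A one-pixel-wide vertical stroke of color, like thin UI text.
stroke = [[0, 100, 0, 0],
          [0, 100, 0, 0]]
print(upsample_nearest(subsample_420(stroke)))
```

The 420 frame carries half the raw samples of the 444 one, and all of the loss comes out of color. The thin stroke comes back at half strength and twice as wide, which lines up with small colored text being the first thing to fall apart.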

Refer to the comparison frames using Final Fantasy 14 above. Look specifically at the text in the quest titles and the chat. Notice how it totally changes? In a separate test, I used some settings for megui that HKS gave me to use the 444 profile - disabling Chroma Subsampling, allegedly.

The changes were astounding.

Only the slightest distortion in color can be seen in the chat log, now. Not to speak of the lighting information - entirely preserved, and this test was done without even changing the rec709 colorspace. Chroma Subsampling was intended to preserve lighting data, but it was in fact destroying it. This required more testing.

Just how much distortion, exactly, occurs from Chroma Subsampling alone?

Not only was the luminance being restored to the way it should have been to begin with, along with it color information was being restored. Chroma Subsampling was outright destroying the image quality in my videos! Why is this default behavior!? Perhaps because of the incredible misinformation spewed by individuals like one who wrote an article claiming that there was "no benefit" to using 444, as well as claiming that the size increases it caused were significant. In just a few short tests we immediately disproved both claims.

This is only the tip of the iceberg, however. The real change is what happens when you combine both the 444 chroma subsampling and colorspace discoveries.

Doubling Down the Chromosomes

Keeping encodes tight and efficient while attaining high quality in x264 is performed through a wide variety of hacky, cheeky, sneaky bullshit. The reason why still frames look like garbage is because the entire concept is about tricking your eyes into seeing a high quality image in motion. Psychovisuals is the name of the game, and compression, especially in my videos, needs to be very high to keep them small enough to handle on the third-world internet in Canada.

Many of the settings within x264 encoding aren't just about raw "quality" or "perceived quality", but also how the encoder analyzes the image it needs to process. Analyzing the image is just as important as actually processing it - that's why we have things like i-frames, lookahead frames, motion estimation, so on so forth. The whole goal is to balance the processing time (encode time) with handing the encoder the most accurate and useful information it can need to provide you with a high quality end product.

Previously, we were handing the encoder a fucked up image by handing it incorrect rescaling information in the process. The resulting file looked very good, but it wasn't 1:1 with our source in colors and the actual frames of the videos told the bloody crushed story of the colorspace conversions. The extra layer to this is the kind of information that is being handed to the compressor. The YUV to RGB conversion in itself isn't producing a lot of problems, since the codec is designed to do this losslessly, but we still want to avoid doing this where we can if only to save CPU time.

When the encoder is processing a crushed image, certain color ranges are going to be crushed or ballooned out to what you're actually seeing. That means that bitrate and "quality" will be allocated to the frame in a suboptimal manner, based on information that really isn't what the final product will look like. Couple that with the fact that the end result is super compressed from Chroma Subsampling, has inaccurate luminance data from rec709, and is going through another colorspace conversion on top of it, and you have a fairly heavily processed image with suboptimal compression from x264 managing it.

The 60mb gain in 1.6gb from disabling Chroma Subsampling is entirely meaningless. However, I wouldn't be able to handle lossless RGB encodes from fraps and switch all of my settings to RGB without reaching the limits of my actual hardware. The results of these new discoveries are still very good, but I have to make some adjustments to how I encode videos.

We want to hand the encoder as close to a perfect set of data as possible so the compression can reflect our final product and avoid some of the colorspace conversions our video will go through.

What are the advantages to doing so?

There are few better testing grounds than the CRF 30 encodes for World of Warcraft. With its washed out, low-quality textures, bizarre proportions and schizophrenic color palettes, aliased text and tiny icons, Blizzard's trainwreck MMO makes for an encoding nightmare that we'll struggle to pull sharpness out of.

Colors on all details get tightened, compression on gradients improves substantially, icons get sharper, fine details like the imp's fire trail are cleaner, artifacting overall flows with the image more like you'd expect.

This one is insane. The image completely changes in many locations. The chroma subsampling caused a perceived shift in the location of the bottom left of the Eredar's platform. The unit names tighten up massively. Colors for the text become cleaner and more accurate. The gradients on the water, again, become cleaner.

This is a tougher one that shows the limitations of the 30 CRF profile I'm using. Even so, those limitations show the re-allocation of the bitrate for this high-motion frame very splendidly. Compression shifts away from hard edges and towards the bottom left where it's less noticeable. The banner gains colors and all the washed out textures sharpen substantially.

All in all, the WoW video rose by 400mb with the combined sum of our changes - from 3.6gb to 4gb for a near 3 hour long video. This size increase isn't even worth mentioning, and if we really want to we can go to CRF 32, but that's hardly necessary. All across the board we're seeing substantial improvements virtually for free. I suppose it's possible more complex video footage may see a larger hit, but given the high motion of these videos and the extremely predictable sizes based on them, it's probably nothing that my suggested rescaling pipeline for runs like Skyrim can't resolve.
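For scale, the size figures above work out like this. It's a quick Python sketch, and it assumes the video is exactly 3 hours long and that 1gb means 1024mb, neither of which is exact.

```python
def percent_increase(before, after):
    """Relative growth from `before` to `after`, in percent."""
    return (after - before) / before * 100

def average_bitrate_mbps(size_gb, hours):
    """Average bitrate in megabits per second, treating 1 GB as 1024 MB."""
    megabits = size_gb * 1024 * 8
    return megabits / (hours * 3600)

growth = percent_increase(3.6, 4.0)
bitrate = average_bitrate_mbps(4.0, 3)
print(f"size growth: {growth:.1f}%")
print(f"average bitrate of the new encode: {bitrate:.2f} Mbit/s")
```

That's roughly an 11% bump in size, landing at about 3 Mbit/s on average for the whole video.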

Furthermore, because of how the compression has been tightened up, the psychovisuals of x264 really shine when comparing new, lower-quality encodes to old, high-quality encodes. In all tests I've thus far conducted, I've dropped 28 CRF to 30 CRF and actually substantially gained quality. Because gradients and contrasts are far less noisy, motion is cleaner and smoother, and the impact of the quality in simple things like moving the camera around in WoW or Dark Souls cannot be overstated. That said, some of this quality improvement may be lost on people using shitty TN monitors or viewing videos from their watch or cellphone.

Here's the full mid-2018 pipeline.

Fraps ->

We're still recording with fraps. We're not using Lossless RGB. HKS has set up an OBS compile and configuration we can use for some productions. I bulletpoint that pipeline and its advantages/disadvantages here.

Vegas/Frameserver ->

No changes here. Both are set to RGB. I set Vegas to 32bit (full range) and it seems to fix some non-fraps videos. Frameserver is set to 24.

Avisynth ->

We've made some changes to the AVS script. Our script looks like this now.

AVISource("V:\Unreal_Dev2.avi")

Yeah. That's it. We've stripped out the Convert command and the ChangeFPS command, though the latter may be necessary to use later on, but these were mostly present due to the lack of such controls in megui to begin with - ffmpeg allows us to define them manually, so that's where we do it.

Else, we'd be using ConvertToRGB24(matrix="pc.709"). RGB32 is unnecessary since it includes an alpha channel. Since I couldn't get megui to behave, I couldn't test if there were any benefits/drawbacks to using YV12 or RGB in this function. It probably makes sense to stick to YV12, but I can't say for sure. Megui has settings in its x264 configuration for colorspace but they are only metadata for archaic media players.

ffmpeg ->

The current script for ffmpeg is the following.

ffmpeg -i "C:/anonh.avs" -map 0:0 -c:v:0 libx264 -crf 30 -me_method umh -subq 9 -trellis 2 -x264-params fullrange=on -pix_fmt yuv444p -color_range 2 -colorspace bt709 -vf scale="in_range=full:in_color_matrix=bt709:out_range=full:out_color_matrix=bt709" "H:\HMesk_DivinityTEST.mkv"

I can't take any credit for this script, it was devised by Drunk Swede. This script isn't simply defining color changes, ME values and other stuff, it's also defining some settings that ensure MPC is actually viewing the video as Full Range and not trying to recalibrate it. The rest of the settings are from previous research in megui.

I'll break down the commands into a fairly layman's level.

-map - Determines which input streams end up where in the output. Since mkvs can contain multiple audio or video streams this simply ensures they're in the right spot.

-c:v:0 - Telling ffmpeg to use x264. I'm using an ffmpeg compiled for 10bit which is non-standard apparently.

-me_method + subq - These are the motion estimation options.

-trellis - Trellis quantization, a rate-distortion optimization I believe. It's related to macroblocks and quantization.

fullrange, pix_fmt, color_range, etc. - These are all colorspace-oriented commands. Some define metadata or flags for the video player, even if it isn't necessary, just to ensure that it isn't "guessing" what format the file is. They are pretty self-explanatory.

-vf Scale - To be honest, this is the one that confuses us the most. Without it, the files will have crushed colors, but the function of the command is to convert from one colorspace to another and we're not using it legally by defining it to convert between the same colorspaces. What's important is that we're manually defining how the input and output are handled by ffmpeg to ensure no automation results in undesirable conversions - because this does happen quite a bit.

For audio, we'll use something like this since we need to edit our audio before finally merging it with the video in mkvtoolnix:

ffmpeg -i "C:/anonh.avs" -acodec pcm_s16le -ar 48000 "H:\CURRENTVIDEOAUDIO.wav"

This'll actually be the first time we get lossless audio out of Fraps for straight encodes, too, since normally I extracted max-quality AAC files out of the merged mkv and edited those for direct encodes (I used .wav out of Vegas). Megui doesn't support .wav encoding. It's a small thing, but it does speed up my final motions a bit.

The Ugly Cuckling

As it turns out, a lot of these problems we've encountered over the years, much less the mass ignorance of them, are a result of the film industry doing its best to keep visual quality at a minimum. This is also observed in Unreal 4, where the tone mapping system has been destroyed to adhere to "film standards", resulting in colors warping with bloom and producing extremely undesirable results for everyone, not just arts students trying to mash Turbosquid assets together. One would expect resulting filters and systems to be designed to scale down graphics, as is the case with virtually every other kind of visual content, instead of destructively scaling up. However, given the state of the film industry, quality was clearly never their concern - only distraction. Indeed, the destructive behavior of color crushing and chroma compressing digital media is extremely distracting - and not in a good way at all. It's baffling how this seems to be met with hardly any resistance, but one could simply conclude that the lack of education and widespread proliferation of shady and low-quality platforms like Youtube contributes to the overall stagnation of motion picture technology.

The tools are there to curb the cancer, and today we learned a little more about how to use them.

Furthermore, extended research has been made that has resulted in several iterations to this article because accurate and definitive high-level information for these subjects is so difficult to come by. While I can find a dozen vague definitions on the internet in a few seconds, none are paired with real-world examples and they tend to conflict with adjacent posts. The task of finding layman's level definitions of every element that can then be broken down is virtually impossible. I hope that one day I can polish up this text so it can serve as such a resource, but I'm still only just starting to learn about this material, and the internet sure doesn't make it easy.

A part of the problem is that there are so many different ways to do the same things in the same tools. Since standards are typically defined by corporations with little reason to pursue quality or education there is no resource except third-party material, most notably documentation such as ffmpeg's, to hold one's hand when taking their steps into understanding the madness behind the mechanisms. It's of little surprise that, given the current generation's obsession with instant gratification and aversion to high quality, relatively superficial and simple features like colorspaces and compression features can be tough to discover, much less nail specific details on without significant research coupled with a great deal of trial and error.

Quality is objective and there is no reason why the industry shouldn't be pressuring all developers to reach for it.

Conclusion

Anyone who tells you Full Range isn't worth it for anything you'll be viewing on a PC is retarded, misinformation regarding many of x264's features is boundless despite the ease of testing them, and at the end of the day we improved our video quality rather substantially. It's likely we'll discover more as we continue to research, and if this is the case further articles will be made in kind.

Fraps and its lack of flexibility make for a tough partner to work with when looking to squeeze out every drop of performance from my 2009 computer system, and furthermore provide an extremely inflexible source to deal with when addressing colorspaces and other encoding features. The dire need for a suitable Fraps alternative is further highlighted by this research, but we'll just have to keep praying to Urist for salvation. HKS researched the potential of using OBS to replace Fraps, which has limited but promising applications. You can read about that pipeline here.