Tales of Production Tartarus (2018-2020)

Herein lie legacies retold from legends handed down over the course of my video productions. May they give insight into the utter hell I've had to endure trying to produce motion picture media. The legends are divided by production.

My Voice Belongs to IGN

Back when I still released on Youtube, I was sexually assaulted by a variety of scummy firms and opportunistic shadow companies looking to make a quick buck off of my videos with ad revenue. The general tactic is to scare people into believing they genuinely have copyrighted content in their video so they won't contest, and the system back in 2010 still put the burden of proof on the victim rather than the claimant. Thankfully, I was still able to contest the disturbingly large number of false copyright claims from many Korean and Russian groups, but most notably from IGN. IGN repeatedly put claims on my Starcraft 2: Wangs of Liberty videos and, of course, was never required to establish what exactly it was claiming. Since IGN holds no copyright over Blizzard content, the only thing they could possibly be claiming was the commentary itself - my voice. I didn't make money myself, and I sure wasn't going to let these corporations make money either, so I contested every single false claim. It did get tiring, though, since I was usually hit with half a dozen or more every week during SC2's short-lived prime, and the false claims kept coming right up until I finally deleted the channel. There really should be consequences for these false claims, especially from trolls like IGN. Deleting the offender's channel, banning all related IPs, and filing a legal claim for attempted fraud would be a good start.

Nowadays the copyright system is even more broken: third parties are randomly given absolute power over the network, and flag brigading is commonly used to censor competing channels or color commentators Reddit gets butthurt by, a practice Google doesn't merely endorse but celebrates.

Furthermore, when my channel broke 1 million views I ran into an all-new trolling problem: partnership bots. Turns out 1 million is the breakpoint at which most bots begin to flood your inbox with partnership requests. I guess it's sort of expected that anyone that popular would be a big moneymaker. Curse, some machinima group, and others all wanted a taste of my dick. After reading that they required access to my account and that their TOS required me to remove 90% of my content or police what I said, I blocked every single one that showed up without hesitation.

That isn't even counting the day-to-day frustration. Most of the SC2 videos - many split into 10-15 minute parts due to Youtube's arbitrary length limit, which would later require a fucking phone number to lift - took 3-4 upload attempts before they went up. Even then it wasn't guaranteed; many uploads failed to actually process, and those that did succeed wouldn't show 1080p for days at a time. I also discovered that, on top of destroying the quality, Youtube's alleged "original" sizes were over 40% larger than my own files. Tack on the nonstop complaints about buffering and rampant censorship in Germany and Canada, and I couldn't force myself to deal with the braindead user interface any longer just to serve a fraction of my potential viewers with mediocre release quality.

The trip didn't end with Youtube, though. Now I was in my own arena - and it was more than willing to oblige my need for insanity and incompetence.

Early Console LPs (Viking, Killzone 2/3, Dante's Inferno, etc.)

I was recommended the Blackmagic Intensity Pro capture card by TotalBiscuit when I asked about hardware on TeamLiquid back in 2010-2011 or so. As far as the hardware is concerned, I couldn't really have asked for more besides built-in HDCP stripping. Software-wise, though, I was in for a wacky fucky ride.

Early console games were recorded with Blackmagic Media Express, an extremely limited monitoring program that came with the capture card. Finicky at the best of times, Media Express didn't let me fullscreen the video, and its preview wasn't at native resolution. Thus, I played all those early titles in the exact window shown here - crushed and pixelated. Being unable to spot red eyes in Killzone or details in God of War was hardly the worst of my troubles with this setup, though.

While Media Express was relatively functional as a video recorder, it didn't support audio with my configuration, and I'm not sure it even supported realtime audio preview at all back then. Either way, I was stuck with a worst-case scenario: I needed to record the audio separately.

Anyone who's ever dealt with video knows what a nightmare recording audio separately can be. There's virtually no software on the market that can reliably record several hours of audio without some kind of sync issue. On top of that, I needed to record multiple tracks. I tried several programs, and this was my experience:

Audition 1.5 - Dropped pieces of audio every 2-5 minutes and drifted out of sync (Killzone 2 and Viking, at least). For Killzone I was able to manually resync it so there was only an echo. With Viking, I manually resynced the audio every 2-3 minutes for the entire video, using Sony Vegas to preview the video at 3-4fps because previewing was that slow.

Audition 3.0 - Desynced both audio streams from each other + randomly stopped recording entirely with no error reporting.

Audition 5.5 - Same as 3.0.

Pro Tools - Used 99% CPU at the driver level even when the program wasn't running, claimed my Asus Xonar D2 didn't support ASIO (a listed feature), and refused to operate. Trash; uninstalled immediately.

Vegas - Randomly stopped recording or crashed, but rarely, and didn't desync (used for God of War). The big exception was the GoW2 end segment that somehow ended up with two entirely desynced audio tracks.

Nothing else in my toolset at the time supported multitrack recording, or it flat out couldn't access my devices.
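The text never pins down why the separately recorded tracks drifted. One common culprit, offered here as an assumption rather than a diagnosis, is two devices whose sample clocks don't run at exactly the same rate. A minimal Python sketch of how small a mismatch it takes to ruin a multi-hour recording (the 0.01% clock error is a hypothetical figure, not a measurement from these setups):

```python
def drift_seconds(nominal_rate_hz: float, actual_rate_hz: float, duration_s: float) -> float:
    """Desync accumulated when audio captured by a clock running at
    actual_rate_hz is played back as if it were nominal_rate_hz.

    Negative means the audio track comes out shorter than the real event.
    """
    samples_captured = actual_rate_hz * duration_s
    playback_duration_s = samples_captured / nominal_rate_hz
    return playback_duration_s - duration_s


if __name__ == "__main__":
    # Hypothetical: a "48 kHz" device whose clock actually runs 0.01% slow.
    four_hours_s = 4 * 60 * 60
    print(f"{drift_seconds(48_000, 47_995.2, four_hours_s):.2f} s")
```

That works out to roughly a second and a half over four hours, far more than enough for a voice to visibly lag what's on screen.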

Eventually I was recommended VirtualDub (or tried it on my own, I can't recall) and discovered that it could actually hook the capture card. This produced a window I could record with Fraps, at the game's native resolution. The caveat was that I needed to toggle "Enable Post Process Chain" on and off to get the program to hook the card, and it wasn't reliable. I usually needed to flip the option several dozen times to get it to hook, but sometimes it took hundreds of attempts. I could be sitting there going through the same dropdown, turning it off and on, for half an hour or more just to see any video.

Some years later, ptbi, a program made specifically for frapsing video from this capture card, became functional enough that it could run on my computer without immediately crashing. It was then I discovered that VirtualDub's input latency was anywhere from a quarter of a second to half a second or more, which had been a considerable handicap in every single one of my LPs up to that point.

It wasn't until around DMC3, I believe, that ptbi entered the pipeline and finally eradicated the dysfunctional half-solutions I had been using for years. Fraps, however, continued to produce inaccurate video color all the way until 2018, when we learned about chroma subsampling as explained here. To this day I still can't fullscreen console games while recording, because doing so changes the video resolution.
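The chroma subsampling explanation linked above isn't reproduced here, but the core idea fits in a few lines. In 4:2:0, every 2x2 block of pixels shares one pair of color samples while brightness keeps full resolution. A deliberately simplified Python sketch on a single chroma plane, assuming plain averaging and nearest-neighbour reconstruction (real encoders and players use filtered resampling and specific chroma siting, and this says nothing about exactly what Fraps got wrong):

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane into one sample (4:2:0).

    Assumes even width and height.
    """
    height, width = len(plane), len(plane[0])
    return [
        [
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


def upsample_nearest(plane):
    """Blow each chroma sample back up to a 2x2 block for display."""
    out = []
    for row in plane:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


if __name__ == "__main__":
    # Hypothetical Cr plane: neutral 128 background with a one-pixel-wide red line at x=1.
    cr = [[128, 200, 128, 128] for _ in range(4)]
    restored = upsample_nearest(subsample_420(cr))
    print(restored[0])  # the line is now a paler 164 smeared across two pixels
```

Thin, saturated details like HUD text and red eyes are exactly what this kind of averaging washes out.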

Fraps Hell

Fraps is a very functional, very performance-conscious program compared to the other solutions available for recording video in 2018, and by no objective margin are any of the mainstream video recording applications capable of withstanding even the slightest QA scrutiny. OBS requires source code modification to be competitive, and no other software even remotely competes with Fraps, provided you have a secondary hard disk to handle it.

Other than its extremely inefficient IO load, Fraps' main caveat is that it never received attention from a developer who actually used the application, and has received barely any developer attention at all since it was first released. Therefore, basic features like multitrack audio recording simply didn't exist. Fraps never once desynced my audio except in extreme circumstances, but it required a precise and careful dance to make sure the recorded audio was balanced volume-wise, since you'd be getting a single stream containing both game and voice audio.

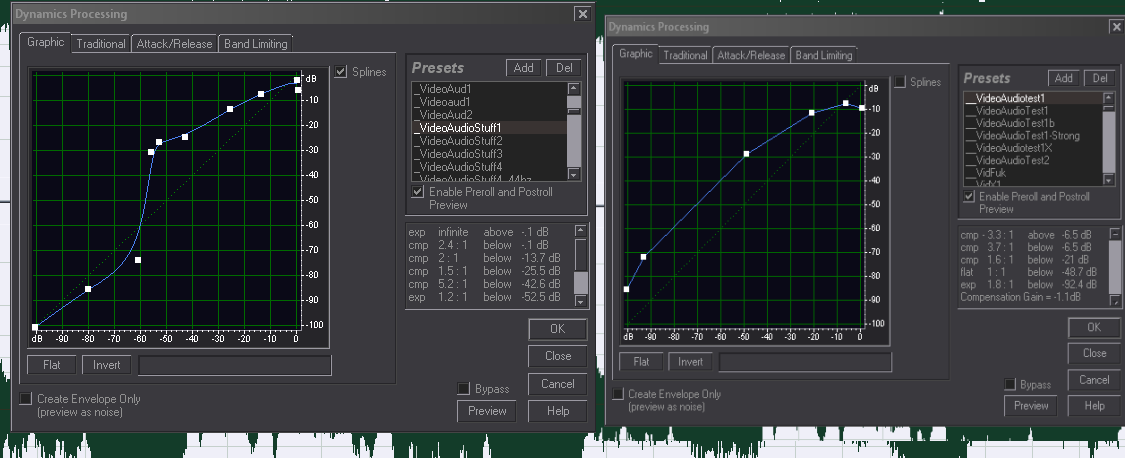

Although the end result and desire were always the same - a balanced final product that kept my low lows and high highs controlled while coloring the game audio as little as possible - my methods and approaches changed over the years as extensive testing and thousands of hours of production experience racked up. No solution was ever perfect, and every single one of them came with oodles of horrific, brain-damaging challenges that led to its development.

Ghetto Blaster Setup (WoL, Earthbound, etc.)

My earliest recordings used a $20 Mac microphone placed between the speakers and myself so it could pick up the game audio from the speakers. When I upgraded to Windows 7 and developed a "proper" recording environment (recording both the game and mic at once), I couldn't afford headphones - so I played with speakers while recording game audio, but tried to keep the volume as low as possible. This wasn't always successful (see: Earthbound). Truly lived the Youtube dream for quite a while.

Compressors have helped me tame the wacky world of audio in videos, territory where virtually no other LP'er has even tried to travel. While my extensive modding experience prepared me to step into the world of processing audio for videos, the merged audio from Fraps presented an eternal challenge in tuning and perfecting my setup, one constantly tested by changes in hardware, environment, and my pursuit of perfection.

Compressors

My first Youtube setup used a compressor specifically designed to smash a particular frequency range for my voice acting. It was the most aggressive and heavy compressor I'd ever use in a video production.

Despite the morbidly aggressive compressor and extremely shitty microphone, the setup produced higher quality audio than nearly every commercial equivalent as of 2018, as most firms use far more aggressive compression than even this (see: Blizzard DoTA official Youtube videos). The biggest issues with this setup were my lack of understanding of how attack and release timings worked, and a curve that produced bouncy results with game audio. With no external knowledge on the subject to be found, I was left entirely to self-discovery. Eventually, I produced a string of compressor settings targeted towards my video productions rather than voice acting, since by 2011 I had effectively stopped the latter and could focus my audio time on improving the quality of my video work.
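Since attack and release were the sticking point, here's a minimal Python sketch of what those two knobs do in a generic feed-forward compressor. It's a textbook-style design with made-up default values, not Audition 1.5's compressor and not the settings used in these videos. Attack controls how quickly gain reduction kicks in when the level rises; release controls how quickly it lets go. Set them badly against game audio full of transients and the gain bobs up and down with every explosion, which is one way to get the bouncy result described above.

```python
import math


def compress(samples, sample_rate, threshold_db=-20.0, ratio=4.0,
             attack_ms=5.0, release_ms=100.0):
    """Generic feed-forward compressor sketch (hypothetical defaults)."""
    # One-pole smoothing coefficients derived from the time constants.
    attack_coef = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release_coef = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope_db = -120.0
    out = []
    for x in samples:
        level_db = 20.0 * math.log10(max(abs(x), 1e-6))
        # Rising level follows the attack time, falling level the release time.
        coef = attack_coef if level_db > envelope_db else release_coef
        envelope_db = coef * envelope_db + (1.0 - coef) * level_db
        over_db = envelope_db - threshold_db
        # Above threshold, output rises only 1/ratio dB per input dB.
        gain_db = -over_db * (1.0 - 1.0 / ratio) if over_db > 0 else 0.0
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out
```

A signal sitting 20 dB over the threshold at a 4:1 ratio settles 15 dB lower; anything that never crosses the threshold passes through untouched.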

Over time I found solutions that worked broadly as a one-size-fits-all by targeting the specific ranges my voice typically occupied, with an emphasis on flattening peaks and boosting the low range. My initial recordings had an elevated voice presence that I gradually toned back (or lost to depression), and the aggressiveness of the profiles decreased along with it.

With very little actual experience tweaking these settings and no assistance to go on, it took a disgustingly long time to really understand how to get the most out of this setup. This was particularly tested when my Yeti died and I received an AT2020 from Lavarinth, a series of events that catapulted me into a much more complex solution than I had been using previously.

Crushing Sibilants

As explained in an educational video about microphones, the AT2020 produced more pronounced sibilants than the Yeti. Around the same time, I began conversing extensively on audio-related subjects with a drunken Swedish man, the only person I had yet met with experience similar to my own - and more in some cases. He introduced me to the concept of multiband compression and using it to tackle the sibilant issue.

Unfortunately, I couldn't devise a solution with the software he recommended, iZotope Ozone, alone. My tests all showed that every single compressor I tried besides Audition 1.5's default produced crackling on clipped peaks, and iZotope's was no exception. Furthermore, I couldn't achieve satisfactory results with iZotope's tools in rebalancing the low and mid ranges the way my existing compressors had been doing.

The first production to use the new microphone was Dragon's Dogma: Dark Arisen, but my new audio solutions from 2016 didn't fully take effect until Dark Souls 3 - midway through 2017 - when my approach to the troublesome Fraps-mic dance became more heavy-handed. It was then that I made a few small discoveries in manipulating the compressors, and integrating Ozone into the toolset was fully fleshed out.

The net result was to use two compressors: a default Audition one that produced the base sample, and then a very light Ozone one that attacked only sibilants. Both compressors were highly dependent on the game audio and my voice being at very specific levels, and with Fraps this meant more growing pains.
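The sibilant stage of that setup comes down to one idea: split off the high band where sibilants live and compress only that, leaving the low and mid ranges alone. A minimal Python sketch; the one-pole crossover, the 5 kHz split point, and the threshold, ratio, and release values are all assumptions for illustration. Ozone's multiband compressor uses proper crossover filters and is driven from its UI, not from code like this.

```python
import math


def split_bands(samples, sample_rate, crossover_hz=5000.0):
    """Split a signal into low and high bands with a one-pole low-pass.

    high = input - low, so the two bands always sum back to the input.
    """
    a = math.exp(-2.0 * math.pi * crossover_hz / sample_rate)
    low, state = [], 0.0
    for x in samples:
        state = (1.0 - a) * x + a * state
        low.append(state)
    high = [x - lo for x, lo in zip(samples, low)]
    return low, high


def de_ess(samples, sample_rate, threshold=0.1, ratio=4.0,
           crossover_hz=5000.0, release_ms=50.0):
    """Compress only the high band (hypothetical sibilant range); pass the low band untouched."""
    low, high = split_bands(samples, sample_rate, crossover_hz)
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope, out = 0.0, []
    for lo, hi in zip(low, high):
        # Peak follower: jumps up instantly, decays exponentially.
        envelope = max(abs(hi), envelope * release)
        gain = 1.0
        if envelope > threshold:
            # Map the envelope onto the threshold/ratio curve and scale the band to match.
            gain = (threshold + (envelope - threshold) / ratio) / envelope
        out.append(lo + hi * gain)
    return out
```

Because the gain follows a smoothed envelope instead of reacting to each individual sample, it turns the band down rather than clipping it, which is the difference between taming an "s" and adding crackle.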

Many runs had issues with audio balance, each requiring its own special fork of compression or, in some cases, manual work to properly rebalance. Dark Souls 3 was no exception. It wasn't until the 2018 OBS 444 pipeline that I finally broke out of the prison of merged audio. Some runs, like FF14, still required compressors on the game audio because the game itself was so poorly balanced, but I could use a very light compressor on those and my standard setup on my voice.

It took 8 years to reach an acceptable stage in my audio processing where I feel relatively content with the end result.

Noise Control

Runs recorded during summer had to contend with a plethora of very large, metal-bladed industrial fans used to combat the 35-40C high-humidity weather we Canadians are forced to endure. Without first-world solutions like air conditioning at our disposal, recording conditions became a hell of deafening, jet-engine-like noise and turbulence. Although all of my microphones were condensers, none had major issues with the former, but the latter was a particular problem for the Yeti, which picked up all vibrations as dull thuds that the compressors then boosted considerably.

Even more perplexing was a repeated, almost rhythmic "thump" that could be heard throughout my early productions with the Yeti. One source was a cable brushing against my chair when I moved, which I did often, since anxiety commonly drives me to make repetitive and uncontrollable rocking motions. The other source, after much hunting, turned out to be the heads on my external hard disks moving back and forth. Even though it sat on an adjacent desk, the Yeti picked up the vibrations of those moving disk heads, and the compressor then boosted them much like the fan rumble. The solution was to put a mixture of bubble wrap and foam under the external drives to absorb the vibrations.

In this color photograph of my early setup from ~2012-2013 you can see the external hard disks (behind the coffee bottle) and their padding. The Yeti was placed on the slide-out keyboard panel when I was seated, which meant it would also pick up horrendous noise from typing on my membrane keyboard, let alone the mechanical one I acquired later on. I had to give the Yeti its own padding as well.

Initially, I used Noise Reduction profiles to try to fix the fan rumbling in the audio, since it often irritated my ears. However, these resulted in washed-out audio (see: God of War). It wasn't until I did cleanup work for a Starcraft 2 machinima developer, who had provided me with voice samples recorded by someone who voice acted with their microphone inside of their throat, that I discovered a very simple and elegant solution to both 90% of pops and fan rumble, as they conveniently occupy the same general frequency range.

Rather than using a large profile to manipulate the entire waveform, I use a simple FFT filter to destroy one very specific range - the lowest one. This happens to include most pops and fan rumble. The fan problem disappeared entirely when I acquired the AT2020, though, especially since I could just wrap the mic in an eyeglass cleaning cloth, which was thin enough to function as a pop shield but stopped all turbulence at a fraction of the real estate. The Yeti, being as large as a dildo, required the much-lauded "Wall of China": a series of boxes with a large metal disk from a fan mount to block both the side of the Yeti and the space above it. Given my limited desk space, such solutions were highly unstable and prone to spontaneous collapse.
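The text doesn't say which FFT filter or cutoff was used, so here is a bare-bones Python illustration of the idea: transform a block of samples, zero every bin below a cutoff, and transform back. It assumes a single block whose length is a power of two, and the cutoff in the test signal is a placeholder. Applied block by block to real audio this would click at the block edges; practical FFT filters process overlapping, windowed blocks.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def ifft(spectrum):
    """Inverse FFT via the conjugate trick."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in spectrum])]


def cut_lows(block, sample_rate, cutoff_hz):
    """Zero every bin below cutoff_hz (including DC) and keep the rest."""
    n = len(block)
    spectrum = fft(block)
    for k in range(n):
        # Bins k and n - k hold the same frequency, so both must go.
        freq_hz = min(k, n - k) * sample_rate / n
        if freq_hz < cutoff_hz:
            spectrum[k] = 0j
    return [v.real for v in ifft(spectrum)]


if __name__ == "__main__":
    rate, n = 1024, 1024
    rumble = [math.sin(2 * math.pi * 10 * t / rate) for t in range(n)]
    voice = [math.sin(2 * math.pi * 200 * t / rate) for t in range(n)]
    mixed = [r + v for r, v in zip(rumble, voice)]
    cleaned = cut_lows(mixed, rate, cutoff_hz=50)
    print(max(abs(c - v) for c, v in zip(cleaned, voice)))  # tiny: the 10 Hz "rumble" is gone
```

Unlike a noise reduction profile, nothing above the cutoff is touched at all, which is why this approach doesn't wash out the voice.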

Everything Is Buggy As Fuck

Until mid-2018, my pipeline of "edit it in AviSynth or Vegas, encode it with Megui, then merge it with the edited audio in MKVMerge" basically never changed. Only the way certain steps were handled changed, and usually because everything I dealt with was stupidly buggy and unreliable.

MKVToolNix is one of those tools I've classified under "it works so long as you don't need to do anything with it". I never had problems with it initially, so when strange things showed up, like an entire Conan segment with half of its video occupied by a half-frame of grayscale, or bluescreens from merged videos, I was a little weirded out.

I'm still not sure how a lot of these bugs actually happen, but in all but the most specific cases, simply re-muxing the video makes them disappear. Certain versions are more problematic than others, with the tried-and-true "if it isn't broken, don't fix it" motto applying very strongly to the strings of updates that introduced markedly more bugs during certain periods.

While I used to merge multiple pieces of video together in my old recordings, I intentionally stopped doing this in mkvmerge and instead encoded them together at the final length, exclusively because of the vast number of times mkvmerge broke files. Furthermore, mkvmerge would break files only for certain players, most notably VLC. This particular bug is especially likely if you end up with a warning about differing formats in mkvmerge, usually related to audio bitrates in my experience. These warnings appear harmless but almost always result in extremely severe playback issues at the merge frames, and almost always totally break the files for VLC and Chrome. Thankfully no sane person uses VLC or Chrome for viewing videos, so I ignore any issues that crop up only in those players.

Aspect Ratios Got Fucked Everywhere

All of my early videos, such as Earthbound, fail to actually carry the proper aspect ratio. There's no readily available way to automate this except the "Use Preview DAR" button inside the Preview window in Megui. If you don't click that button and leave the preview window open, you'll get the problem my early LPs have: they'll assume the wrong aspect ratio and need to be manually overridden in some configurations. There are other ways to do it, like using an unusual command in an AviSynth script or configuring things in mkvmerge, but they simply shouldn't be necessary.
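What "the proper aspect ratio" boils down to: the stored frame size alone says nothing about shape, so the file (or the player) also needs a display aspect ratio, and DAR = (width / height) * PAR, where PAR is the pixel aspect ratio. A minimal Python sketch with hypothetical numbers; the Megui button and the mkvmerge route mentioned above are ways of getting this information into the file, but the exact fields they write aren't covered here.

```python
from fractions import Fraction


def pixel_aspect_ratio(width, height, display_aspect):
    """Pixel aspect ratio needed for width x height to display at display_aspect."""
    return display_aspect / Fraction(width, height)


def display_size(width, height, display_aspect):
    """Square-pixel size a player should show: keep the height, stretch the width."""
    return round(height * display_aspect), height


if __name__ == "__main__":
    # Hypothetical 720x480 capture meant to be shown at 4:3.
    dar = Fraction(4, 3)
    print(pixel_aspect_ratio(720, 480, dar))  # 8/9: each stored pixel is narrower than it is tall
    print(display_size(720, 480, dar))        # (640, 480)
```

Without that ratio stored somewhere, a player has no choice but to assume square pixels and show the 720x480 frame at 3:2, which is the wrong-shape problem described above.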

Megui is .net

Megui is a .NET application, and in the right circumstances it's ridiculously unstable and prone to breaking things, which is admirable considering most .NET applications don't run properly in any circumstances at all. It also hands you the most useless of errors when it doesn't like something you gave it, especially if it's something out of Vegas (the frameserver can't handle a file longer than 3 hours, and Megui will be absolutely useless in figuring out that this is why the job fails to load). However, for all of its quirks and limitations, Megui was the most reliable tool in the pre-2018 toolset, other than one particular update which shadow-bricked its own mkvmerge version and proceeded to corrupt every single encode I did with it for a solid two weeks.

HKS reported nonstop issues using the "One Click Encode" feature, but I always did things through the main menu and queued them manually. I figured leaving automation to people who brogram in .NET was just asking for trouble. Thankfully, I avoided a lot of potential problems by keeping my interaction with the program to a minimum, but any time I foolishly thought "updates mean faster and better" I ran a 50/50 chance of breaking things.

The absolute worst behavior of Megui has to be how it handles its profiles. If for any reason Megui crashes or is otherwise blown up (e.g. by a power failure), you run an extremely high risk of losing all of your profiles. You can't access or do anything to the files while Megui is running, which is bizarre enough in itself, but it usually takes the files down with it when something goes awry. This is incredibly irritating and hints at some real braindead chicken scratch behind the fedora.

Daylight Savings Doesn't Save Videos

While I was recording early Segment 1 of the hot trash heap that was The Ball, the daylight saving time change hit. This resulted in video files that would crash players, create voids in the viewable data, skip or break the video stream entirely, or flat out bluescreen your system if viewed with DXVA. A legacy of head-scratching and confusion followed. To this day I'm not sure why, but I presume it's because back then, when I was recording in 4GB chunks from Fraps, the encoded files ended up with keyframe time data that confused the codec. I was forced to cut out that section of video with MKVToolNix and destroy it, because nothing I tried over days of man-hours fucking around with the file could fix it. ffmpeg, Megui, nothing could fix the busted-ass minute or so of video.

That it could actually bluescreen my system was of particular note, since this raises some serious security concerns regarding DXVA.
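The cause above is only a presumption, but if wall-clock time did leak into the stream, either daylight saving transition breaks the assumption that every frame's timestamp is one frame period after the last: springing forward leaves a one-hour hole, and falling back jumps an hour into the past. A hypothetical Python check for both cases on a list of frame timestamps (how you'd extract those timestamps from a given file is not covered here):

```python
def find_discontinuities(timestamps, fps, tolerance_frames=2.0):
    """Indices where a timestamp goes backwards or jumps far past one frame period."""
    frame_s = 1.0 / fps
    bad = []
    for i in range(1, len(timestamps)):
        step = timestamps[i] - timestamps[i - 1]
        if step < 0 or step > tolerance_frames * frame_s:
            bad.append(i)
    return bad


def rebuild_timestamps(frame_count, fps):
    """Timestamps derived from frame index only: monotonic whatever the clock did."""
    return [i / fps for i in range(frame_count)]


if __name__ == "__main__":
    fps = 30
    good = rebuild_timestamps(5, fps)
    spring_forward = good[:3] + [t + 3600 for t in good[3:]]  # one-hour hole
    fall_back = good[:3] + [t - 3600 for t in good[3:]]       # one hour into the past
    print(find_discontinuities(spring_forward, fps), find_discontinuities(fall_back, fps))
```

Deriving times from the frame count rather than the clock is the usual way to sidestep this class of problem entirely.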

VBR Is HIV, Be Polite And Wave

VBR Ogg is why Dark Souls has sync issues all over the place, and it's probably a contributor to some sync issues in other old, longer videos. It may be due to the specific Ogg plugin in Audition 1.5, but I stopped using Ogg entirely after discovering it was the cause of some of the desyncs in end videos. VBR is very finicky in many formats, with only extremely specific encoders capable of producing reliable VBR files. To this day I still encounter the rare MP3 that incorrectly reports its size in Foobar due to fucky VBR settings. Even a slight issue goes a long way toward breaking a 4 hour video. As a rule of thumb, stay away from VBR unless you know what you're doing.
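One well-known way VBR trips players up, shown as an illustration rather than a diagnosis of the Ogg desyncs above: with constant bitrate, length is just file size divided by bitrate, so a player that can't find a proper VBR index (for MP3, a Xing or VBRI header) may fall back to estimating from whatever bitrate it sees first. A minimal Python sketch with hypothetical frame sizes; real MP3 frames are far shorter than one second.

```python
def cbr_estimate_s(file_bytes, first_frame_kbps):
    """Duration a player would report if it assumed every frame had the first frame's bitrate."""
    return file_bytes * 8 / (first_frame_kbps * 1000)


def actual_duration_s(frames):
    """frames: (size_bytes, duration_s) pairs; the real length is the sum of durations."""
    return sum(duration for _, duration in frames)


if __name__ == "__main__":
    # Hypothetical VBR stream: a 320 kbps opening second, then nine seconds at 128 kbps.
    frames = [(40_000, 1.0)] + [(16_000, 1.0)] * 9
    total_bytes = sum(size for size, _ in frames)
    print(cbr_estimate_s(total_bytes, 320), actual_duration_s(frames))  # 4.6 vs 10.0
```

Any seek or sync calculation built on the estimate inherits the same error, and over a 4 hour file even a small misestimate adds up.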

The default AAC encoder in OBS is also utter cancer and you need a standalone CoreAAC installer to get a version that doesn't produce youtube puke when provoked.

Audition + Haali Media Splitter May Troll You

I've been quite content ripping audio out of MKVs with Audition 1.5, thanks to an unpredictable and somewhat difficult-to-install configuration that lets it use Haali Media Splitter for the job. Simply installing the two wasn't quite enough to do the trick, and I'm loath to think what hoops I'd have to jump through to get it working again should I ever need to reinstall Windows once more. However, the process is not entirely bulletproof: sometimes it will hand you audio that is actually desynced. Such was the case with many videos Nefarius sent me for Castover purposes. Hours were sunk into the Rocketeer video when I discovered that the game audio, and not the cast itself, was desynced. The gist of it was that I was forced to manually extract the audio with mkvextract.

This rare but persistent issue with specific files leads me to believe it's some upsampling or downsampling that one of the tools is performing silently and evidently very incorrectly.

Dolby ProLogic Is Dangerously Hot Garbage

In your AC3Filter settings is a deceptively lethal piece of diarrhea straight from the Loudness War Initiative, ready to totally fuck your digital audio experience.

For years I had elusive BSODs that happened only when watching video, and they were greatly aggravated by replacing the Asus Xonar D2 with a Creative card. It turned out that disabling the Dolby ProLogic garbage in AC3Filter, which had also fucked up my audio tremendously, completely fixed them. I had spent years basing all of my audio configurations on by-ear examples in MPC while this setting had been destroying them the entire time, and only while hunting down the escalating BSOD activity did I discover this nonsense. These settings actually apply a second, aggressive compressor on top of Allah knows what in the video player, things which are both undesirable due to the lack of exposed configuration and entirely unnecessary. I don't actually know why this setting was on, but I don't recall ever turning it on myself.

Unbelievably, the compressor behavior of this setting is allegedly not a bug, unlike (presumably) the BSODing. I only discovered this in 2017, years after I had begun building audio compressors based on extensive listening sessions - with useless and highly fucked up material. Suffice to say I was not happy, as I basically had to re-learn from scratch how my compressors behaved on their own, without the Dolby interference, and could no longer trust my experience in the subject.

Vegas is Utter Trash

Vegas is utter trash, and yet it's the only user-friendly UX I've seen in a video editor. Despite all its innumerable problems - some of which I'm about to list off - it's plainly a superior editor and compositor for 99% of the things you could possibly need video editing software for. For the other 1% - Gachi MADs - there's the cesspool of drivel that is Ass Effects.

But, by God, Sony's going to work your pozzhole to squeeze out the few advantages it has.

Unloading Files is Bad, Don't Do It

If you have the "Unload files when out of focus" option on, Vegas will happily unload files while encoding, because the main window is technically out of focus. This is why many older XXEs and Black Sun: Salvation have random black spots without video - Vegas decided to unload them. With Salvation in particular I spent weeks trying to fix problematic crashes and random, unpredictable black spots in the video, even going as far as reinstalling all codecs and eventually the entire operating system, to no avail. I finally caved and mixed together two semi-functional encodes, masking over the black spots with functional parts from previous encodes using frame-by-frame commands in AviSynth.

All of which could have been avoided if I had discovered the truth about this option a little sooner.

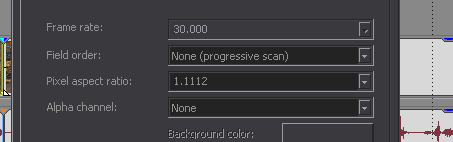

Files Are Timed In Rhymes

For some reason, Vegas's "grid" - the Ruler - is segmented by "Beats" rather than video timestamps like frames, running time in seconds or fractions of a second, or any other kind of shit you'd expect to deal with when editing a video. You can change how the information is displayed, but if you want frame accuracy when accessing your videos you need to mess with the Beats by Dr. Dre setting. I simply set it as high as it'll go, as displayed. I'll just assume this system is the result of an intern forgetting to change a labeling option in the middleware Sony uses for the ruler, because no other excuse makes a lick of sense. I've certainly never heard of anyone editing a video in a time framework of "Beats", and it's definitely not the first thing I think to look for when I want to access specific frames the program just refuses to show me.
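The arithmetic behind cranking that setting up: a beat lasts 60 / BPM seconds. If the grid only gives you a line per beat (an assumption about how the ruler behaves, not something documented here), it can only land on every individual frame once a beat is no longer than one frame, i.e. a tempo of at least 60 * FPS. A small Python sketch; Vegas's actual maximum tempo and snapping rules aren't covered, so treat the numbers as illustration.

```python
def frames_per_beat(bpm, fps):
    """How many video frames one beat spans: (60 / bpm) seconds times fps."""
    return 60.0 * fps / bpm


def min_bpm_for_frame_grid(fps):
    """Tempo at which one beat is exactly one frame long."""
    return 60.0 * fps


if __name__ == "__main__":
    print(frames_per_beat(120, 30))       # 15.0 frames between grid lines at 120 BPM
    print(min_bpm_for_frame_grid(29.97))  # roughly 1798 BPM before every frame gets its own beat
```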

Resample Destroys Everything It Touches

By default, Vegas enables something called Resample. Let me show you what it does!

Vegas Resample ON - Vegas Resample OFF

This is default behavior. Vegas's default behavior also includes setting your encode quality to Medium or Low, turning on de-interlacing, and setting your FPS and color settings to analog TV configurations. Fan-fucking-tastic. If you're like 99% of everyone else who uses a video editor (you know, software on a digital platform for digital videos) and you don't know your asshole from your video files, you will have literally no idea why your 80,000 bitrate video still looks like it came straight off of Youtube.
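Vegas's resampling internals aren't documented here, but the ghosting it causes is what frame blending looks like in general: when the source and project frame rates differ, each output frame is mixed from its two neighbouring source frames instead of picking one. A minimal Python sketch that treats each frame as a single brightness value (a hypothetical simplification), comparing blending with simply picking the nearest frame:

```python
def resample_blend(frames, src_fps, dst_fps):
    """Rate conversion by frame blending: each output frame mixes two source frames."""
    out = []
    for j in range(int(len(frames) * dst_fps / src_fps)):
        pos = j * src_fps / dst_fps  # position on the source timeline, in frames
        i, frac = int(pos), pos - int(pos)
        nxt = frames[min(i + 1, len(frames) - 1)]
        out.append((1 - frac) * frames[i] + frac * nxt)
    return out


def resample_nearest(frames, src_fps, dst_fps):
    """Rate conversion by picking the nearest whole source frame: nothing gets mixed."""
    count = int(len(frames) * dst_fps / src_fps)
    return [frames[min(round(j * src_fps / dst_fps), len(frames) - 1)] for j in range(count)]


if __name__ == "__main__":
    # Hypothetical 30 fps source flashing between black (0) and white (100), converted to 24 fps.
    flashing = [0, 100, 0, 100, 0, 100]
    print(resample_blend(flashing, 30, 24))    # grey in-between values that never existed
    print(resample_nearest(flashing, 30, 24))  # only real source frames
```

With real images, those in-between values are two frames overlaid on each other: ghosting.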

Once you've configured your file to not be trapped in the '70s and to not get obliterated by intentional ghosting, you'll be met with a whole host of wacky bugs, including but not limited to:

- Text input taking up to 30 seconds to buffer each individual character

- Pushing play/pause too fast and causing the buffer to crash and having to restart the program

- Crashing the program from trying to: scrub the track, push play, pause the video, import a sound file, play a sound file, have sound files of different source sampling rates, use anything other than "Classic Sound Mapper" as the audio device on certain hardware, open the render menu, breathe

- Vegas leaving its IOManager in memory and preventing any future instances of the application from loading, giving you an indefinite load bar until you start up procexp and kill the fucking dupe manager program

- Failure to load half the formats the program allows you to import

- Failure to import 90% of standard formats because Sony didn't pay license fees

- Waiting minutes at a time for undoing an action, especially if that action involved deleting anything (they're just references for christ sake it's not like you're fucking encoding a 4k 60fps eroge)

- Waiting 20 seconds or longer for Vegas to buffer a file you're trying to move the track bar past because for some reason an editor with the file in memory is that much slower than just running fucking MPC and tracking it (????????)

- Waiting 5-10 minutes or longer for Vegas to buffer a Lagarith file you tried to track to, especially if you made the foolish mistake of using Camtasia to create it

- Not flushing memory after closing a project

- Not flushing memory after rendering

- Not flushing memory after importing files (reloading uses a fraction of the memory and accessing the files is still faster, i.e. they're not being buffered; it's just incompetence)

- Obliterating windows GDI objects at random

- Highly destructive FPS resampling if your source is different than the project settings

- Flaky audio VST effect support

I've used Vegas since ~2006 across a dozen versions, and in none of those new versions have I seen problems get fixed, only new ones appear. I trained myself to hit Control+S after literally every single action I make because the crashing and buffer bugs are so wildly unpredictable and common, even in small projects like cutting up individual pieces of music for D&D.

In a nutshell, video editing is a trial by fire of patience, and even across more than a decade of using Vegas I continue to run into new and exciting bugs every single time I open it up - almost all of which result in the program straight up crashing or forcing me to restart it. Thankfully restarting is relatively quick, but still extremely disruptive. As long as you never use anything besides the frameserver, Vegas can produce decent-quality 420 files suitable for Youtube or compilations, though it doesn't support lossless 444 formats. However, I have lost months of man-hours to its innumerable undocumented bugs, and all of my Vegas-based productions up to Salvation are utterly destroyed by the resampling behavior.

Sonic 2006 and the Deep Dark Fuck

Schwa began recording Sonic 2006 in 2016, long before we had rooted out a variety of nasty gotchas in the typical capture card setup as explained here and here. Furthermore, Schwa was using what I believe was largely third-party equipment during the streaming events. The issues she faced, for seemingly inexplicable reasons, included audio corruption, audio desyncing, and really messed-up video.

The first sessions, including ones that were streamed after the event, had severe aspect ratio fuckery. Instead of the typical 720p the PS3 uses, the videos were 1680x1050. To remedy this you had to scale the videos in Vegas appropriately.

It took Drunken Swedes to figure out this bizarre, rarely used aspect ratio configuration. My previous dealings with it had been during my efforts to rescale the second Hunted LP recordings, a process that had accidentally destroyed the videos and scaled them inappropriately. Whoops!

To make matters more difficult, Vegas by default will crush these videos down to '70s analogue TV range. I'm not sure why, but it does. The problem is, they're already crushed, so Vegas was crushing them a second time, per Jay Wilson's recommendation. To resolve this I have to apply two duplicate Levels filters.
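A minimal sketch of the range arithmetic, assuming 8-bit luma with the standard limited (16-235) and full (0-255) ranges. It shows why footage that has been squeezed into limited range twice needs the expansion applied twice. The function names are made up for illustration, and Vegas's Levels filter may differ in rounding and in how it treats chroma.

```python
def to_limited(v: float) -> float:
    """Squeeze full-range luma (0-255) into limited/TV range (16-235)."""
    return 16 + v * 219 / 255


def to_full(v: float) -> float:
    """Expand limited-range luma (16-235) to full range, clamped to 0-255."""
    return min(255.0, max(0.0, (v - 16) * 255 / 219))


# Black and white after being crushed twice (once upstream, once in the editor)
for level in (0, 255):
    crushed_twice = to_limited(to_limited(level))
    one_pass = to_full(crushed_twice)      # only gets back to 16 / 235
    two_passes = to_full(one_pass)         # back to 0 / 255
    print(level, round(crushed_twice, 2), round(one_pass, 2), round(two_passes, 2))
```

Real filters round to whole 8-bit values at every stage, which is one place where levels get merged and information is permanently lost.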

Sony Vegas 12 is not even capable of displaying text correctly in many places.

I knew it was working when I had to consult third-party sources to verify the colors were accurate, because the game actually looked worse when expanded back to the color range it had before Schwa's stream butchered it. Evidently, information is lost somewhere in this abominable pipeline, but sticking as close to the source as possible is our mantra.

Also worthy of note is that Vegas would change the actual frame positions of these videos when I turned Resampling off, fucking all of my frame-by-frame sequences and forcing me to redo the entire editing of a segment if I forgot to turn it off before working.

Thumbnails Are Digital Cancer

If you have thumbnails turned on in Sony Vegas they will leak forever, regardless of what you do. The project file will simply get bigger and bigger and more bogged down the more you work with it. Even if you delete files and purge the entire track list, it's still hauling around all that guilt of color.

You can't have thumbnails on. They cripple the entire program very quickly. Astonishing it took me like 16 years to discover this insanity, more astonishing is it has yet to be fixed. Wait. That's not astonishing. That's not even surprising. Of course no one fixes basic, fundamental bugs that cripple core functionality of one of the biggest pieces of software in the entire digital industry. No sir. Bug fixes aren't hip. Changing interface colors is hip. Let's do that instead. Fuck ♂ You, Sony.

AAC vs Audition vs Cockney Accents - A Spectral Waveform Tale

Recently, a Drunken Swedish man asked me "Why do people still use mp3? It's so bad!" The answer is, of course, the perfect mixture of laziness and malice. To add insult to injury, as I always must, I told the Swedish man to check out the spectral waveform of mp3s versus lossless sources. This was to be the Enriched gentleman's first encounter with spectral waveforms, so I needed to give him a quick rundown to make sure the impact of his discovery was every bit as disgusting as it was for me.

You don't even need to know what you're looking at to see the colossal cutoff MP3 has going on around 20 kHz.

Just like this crummy jpeg, mp3 compresses everything it gets its hands on with a sledgehammer, a process which is quite visible in a spectral waveform - a waveform (more formally, a spectrogram) that displays the frequencies of the audio over time instead of the volume. The YouTube CEO might think "Let's just throw some bitrate at it!", but this doesn't fix the problem. The problem is the compression algorithm itself. Hence the whole "lossless" vs "lossy" distinction. FLAC also compresses files, but the algorithm it uses to do so is focused on preservation: decoding a FLAC gives back the exact original samples. Suffice it to say, studying mp3 under the lens of this technology gave Swedish men an enriched hernia.
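For anyone curious what a spectral view is actually measuring, here's a small sketch that estimates the highest frequency present in a clip, which is the "cutoff" that stands out in an mp3's spectrogram. It uses a naive DFT on synthetic test tones so it only needs the standard library. The -60 dB threshold and the test signals are arbitrary assumptions; real analyzers run windowed FFTs over many short frames.

```python
import cmath
import math
from typing import List


def dft_magnitudes(samples: List[float]) -> List[float]:
    """Naive O(n^2) DFT; returns the magnitude of bins 0..n/2."""
    n = len(samples)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * t / n)
                    for t, s in enumerate(samples)))
            for k in range(n // 2 + 1)]


def highest_frequency(samples: List[float], rate: int,
                      threshold_db: float = -60.0) -> float:
    """Frequency (Hz) of the highest bin within threshold_db of the peak."""
    mags = dft_magnitudes(samples)
    floor = max(mags) * 10 ** (threshold_db / 20)
    top = max(k for k, m in enumerate(mags) if m >= floor)
    return top * rate / len(samples)


rate, n = 16000, 160
full = [math.sin(2 * math.pi * 1000 * t / rate)
        + 0.5 * math.sin(2 * math.pi * 5000 * t / rate) for t in range(n)]
cut = [math.sin(2 * math.pi * 1000 * t / rate) for t in range(n)]  # 5 kHz removed
print(highest_frequency(full, rate), highest_frequency(cut, rate))  # 5000.0 1000.0
```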

When distributing content, I aim for high quality. For a time this meant 400-500kbps VBR .ogg. The problem, of course, was the aforementioned desyncing, especially notable in Dark Souls segment 10. After I discovered this, I switched to AAC. It seemed to offer the best bang for my buck in terms of size versus quality.

What is AAC?

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. Designed to be the successor of the MP3 format, AAC generally achieves better sound quality than MP3 at the same bit rate.[3]

...

AAC is the default or standard audio format for YouTube, iPhone, iPod, iPad, ... (Source Wikipedia)

Just reading Wikipedia's header on the format probably makes you cringe. Anything designed in the light of mp3 can't be good. Mp3 is a format that can't be salvaged; it needs to be thrown out and forgotten about. If anything, development should be using .wav as a basis. Furthermore, always pay attention to the kinds of people who use something. If it's people associated with a platform known exclusively for pandering censorship, low-quality products, fraud, gotchas and misinformation, as well as devices built by Chinese child labor shops from a corporation famous only for its tax evasion, you need to start asking questions like, "why would I want to use a format associated primarily with firms who don't give a rat's posterior about product quality?"

The format seemed alright in my myriad productions except for a small niggling that tugged at me every now and then. A niggling that would turn into a noggling when the Swedish Man explored his newfound power of spectral analysis.

The biggest problem with AAC, of course, is that when something is designed to replace a shitty format, the crowd surrounding it is probably already quite accustomed to shitty formats. Without getting into the most technical details, this is probably the most important part of the Wikipedia article.

While the MP3 format has near-universal hardware and software support, primarily because MP3 was the format of choice during the crucial first few years of widespread music file-sharing/distribution over the internet, AAC is a strong contender due to some unwavering industry support.[33]

Keep in mind this is the same industry using an analogue TV range for their colors that was first introduced in the '50s. The same industry that is still using Speex (and its successor, Opus) for VOIP even though Ventrilo has supported superior quality for years. To say something is "better than mp3 so we should use it" is like saying "this is better than shit so we should eat it".

The main advantages AAC offers over mp3 show up at unlistenably low bitrates, 128kbps and lower. No one encodes audio at this quality anyway, so it's something we can ignore (AC3 and AAC compete in this shit-tier bracket). What's most interesting is how inaccurate AAC is even at extremely high bitrates, an inaccuracy that seems to extend to other hipster formats like mp3 and opus.

Let's look at a quick comparison to get to the root of the weirdness the Swedish man uncovered under the hash stacks and beer bottles.

FLAC (Source) - M4A (AAC) - OGG (Vorbis)

M4A retains more high-range sound than ogg, but literally the entire waveform changes. As a format that's supposed to excel at low bitrates, why is it so bad at its maximum standard bitrate?

These questions bring us to these bulletpoints on Wikipedia.

- The signal is converted from time-domain to frequency-domain using forward modified discrete cosine transform (MDCT). This is done by using filter banks that take an appropriate number of time samples and convert them to frequency samples.

- The frequency domain signal is quantized based on a psychoacoustic model and encoded.
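The first bullet is easier to see in code. Below is a minimal, unoptimized sketch of a textbook MDCT with a sine window and 50% overlap-add (time-domain aliasing cancellation). It is not AAC's actual filter bank, which adds block switching, specific window shapes, and much faster algorithms. What it demonstrates: the transform by itself hands back the input exactly, so the loss comes from the second bullet, the quantization step.

```python
import math
from typing import List


def sine_window(size: int) -> List[float]:
    """Sine window; satisfies w[n]^2 + w[n + size/2]^2 == 1 (Princen-Bradley)."""
    return [math.sin(math.pi * (n + 0.5) / size) for n in range(size)]


def mdct(block: List[float]) -> List[float]:
    """Forward MDCT: 2N time samples -> N frequency coefficients."""
    n = len(block) // 2
    return [sum(x * math.cos(math.pi / n * (t + 0.5 + n / 2) * (k + 0.5))
                for t, x in enumerate(block))
            for k in range(n)]


def imdct(coeffs: List[float]) -> List[float]:
    """Inverse MDCT (scaled by 2/N): N coefficients -> 2N aliased samples."""
    n = len(coeffs)
    return [2.0 / n * sum(c * math.cos(math.pi / n * (t + 0.5 + n / 2) * (k + 0.5))
                          for k, c in enumerate(coeffs))
            for t in range(2 * n)]


def roundtrip(signal: List[float], n: int) -> List[float]:
    """Window -> MDCT -> IMDCT -> window -> overlap-add with hop n."""
    w = sine_window(2 * n)
    padded = [0.0] * n + list(signal) + [0.0] * (2 * n)
    out = [0.0] * len(padded)
    for start in range(0, len(padded) - 2 * n + 1, n):
        block = [padded[start + i] * w[i] for i in range(2 * n)]
        rec = imdct(mdct(block))
        for i in range(2 * n):
            out[start + i] += rec[i] * w[i]   # aliasing cancels between neighbors
    return out[n:n + len(signal)]


signal = [float(v) for v in range(1, 13)]
restored = roundtrip(signal, 4)
print(max(abs(a - b) for a, b in zip(signal, restored)))  # floating-point noise only
```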

Psychoacoustics brings us back to x264's compression algorithm and the concept of psychovisuals - the science of removing data in ways that trick the eyes into believing something is more detailed than it actually is. A great case example is looking at still frames of some videos. We fixed a lot of the issues we had with blurriness by feeding psychovisuals a more accurate image when we fixed Limited Range and the horrendous default chroma subsampling in encoders. But what about audio? Truthfully, this is a very unexplored subject for me.
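A toy illustration of what 4:2:0 chroma subsampling throws away: each 2x2 block of chroma samples is averaged into one, then stretched back out on playback. This sketch assumes a plain box average and nearest-neighbour upsampling. Real encoders and players use better filters and specific chroma siting, but the information loss is the same in kind.

```python
from typing import List

Plane = List[List[float]]


def subsample_420(chroma: Plane) -> Plane:
    """Average each 2x2 block into one sample. Assumes even width and height."""
    h, w = len(chroma), len(chroma[0])
    return [[(chroma[y][x] + chroma[y][x + 1]
              + chroma[y + 1][x] + chroma[y + 1][x + 1]) / 4
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def upsample_nearest(sub: Plane) -> Plane:
    """Replicate each chroma sample back over its 2x2 block."""
    out: Plane = []
    for row in sub:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


edge = [[0, 255], [0, 255]]        # a sharp one-pixel color edge
print(upsample_nearest(subsample_420(edge)))  # the edge becomes a flat 127.5 smear
```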

I can tell you I've always had a niggling about audio sync in longer videos. I've always felt certain productions drifted ever so slightly out of sync, but I've often blamed myself and my own inattention for that. The truth is, of course, my instincts were not wrong. According to tests, Opus, AC3, mp3, AAC and probably more all change the timing of the file. If you study the case samples, you'll see it's a drift: it starts synced and drifts further out the more time passes. This is also coupled with the fact that almost the entire waveform changes even at a very high bitrate.
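One way to tell a constant offset (say, padding added at the start) from a true drift is to measure the lag between the source and the encode near the beginning and again near the end. The sketch below does that with a brute-force cross-correlation. It assumes both streams have already been decoded to mono PCM lists at the same sample rate; the window and search sizes are arbitrary, and the test signals are synthetic noise rather than real captures.

```python
import random
from typing import List, Tuple


def lag_at(ref: List[float], test: List[float], start: int, window: int,
           max_lag: int) -> int:
    """Shift of `test` relative to `ref` (in samples) that best matches
    ref[start:start + window], searched over -max_lag..max_lag."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(start, start + window):
            j = i + lag
            if 0 <= j < len(test):
                score += ref[i] * test[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def early_and_late_lag(ref: List[float], test: List[float], window: int = 200,
                       max_lag: int = 30) -> Tuple[int, int]:
    """Equal lags suggest a constant offset; a growing lag suggests drift."""
    early = lag_at(ref, test, 0, window, max_lag)
    late = lag_at(ref, test, len(ref) - window, window, max_lag)
    return early, late


rng = random.Random(1)
source = [rng.uniform(-1, 1) for _ in range(2000)]
delayed = [0.0] * 5 + source                                  # constant 5-sample offset
stretched = [source[int(j / 1.01)] for j in range(2020)]      # ~1% slower: drift
print(early_and_late_lag(source, delayed))     # same lag at both ends
print(early_and_late_lag(source, stretched))   # lag grows toward the end
```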

It's not a container issue, either. If you place Opus into Ogg, the container Vorbis uses, it still drifts. If you place Vorbis or FLAC into any other container, such as MKA, nothing changes.

Why?

In the context of a handheld device from the '90s, it might make sense to crush your assets to unbearably low sizes if you're still stuck on Canadian technology. The problem is, of course, that compression in itself isn't what's inherently destructive in this example. A good codec has scalability - high quality and low quality. Compression is always a tradeoff, and formats like mp3 and AAC trade off a lot more than just quality on a bitrate setting, whereas Opus is entirely unusable at all bitrates. There's a reason why no sane person releases music demos or vocal reels in anything besides wav or FLAC - preservation of their product is the highest priority of any content developer. Low-quality high-compression solutions aren't designed for content developers. Given I've been using AAC at 400-500kbps, which should have been just shy of lossless, the discovery of just how much was changing compared to Vorbis was shocking. The changes aren't as dramatic as my compressor updates or microphone changes, but they all compound each other. There's not much point in spending $450 on an XLR setup if I'm going to be warping the end result and cutting tons of data out at the end of the pipeline. For pipelines on even more limited hardware the prospect of degrading the result even further should be unbearable.

If the spectral waveform is to be believed, a mere 3-minute sample results in significant duration alterations. Even a few seconds of voice samples will still yield the drift and inaccuracies. The comparison to ogg, which at 500kbps only shaves off a bit of audio at the fringe of human hearing, is not one AAC can even hope to compete in. AAC isn't simply compressing the file more aggressively, it's destroying both audio data and time data in all places. It's basically a ghetto King Crimson without the beady eyes and pop music. Even if the difference isn't evident to a lot of viewers, the fact I have been able to notice it in just a few case tests is more than enough for me. The fact AAC changes so much data at a high bitrate setting means it will never produce faithful encodes to source material. AAC is a stillborn format with interesting ideas and no practical use in a pipeline aiming for quality. One question remains, however.

At lower bitrates, ogg will progressively lose more and more high-frequency data but always retains duration. So why did my old videos drift out of sync so badly? The answer probably lies in Adobe's plugin for Audition. They were already using the worst known AAC implementation (the one shown above is better than all of the productions I've unknowingly released thus far), so why not a fucked up Vorbis plugin, too?

This isn't simply agonizing, it's appalling. This kind of shit never reaches the modern industry of Epic Talkshow Hosts using "Private Discord Servers" to host their "podcasts" over Twitch, so important information never gets propagated anywhere.

Ultimately, the expedition left both of us disgusted, and much more so than I had really expected.

MKV & Timestamp drifting

Research into the AAC desync and observed inconsistencies in MKV took an even weirder twist. It turns out most if not all audio formats end up with strange inconsistencies when brought into an MKV, inconsistencies that can persist or change further when exported back out of the file.

This seems to have something to do with the way time information and metadata is stored between audio and videos, but the specifics are difficult to scrape up, especially in this context. It's even possible to get ffmpeg to puke DTS errors from exporting files back and forth. But since we aren't merging different file types together or re-encoding any data, why on earth is the data changing at all?

Study of waveforms from files going through mkvs yields what looks to be partial desyncing, sometimes in very specific areas. Normally, this would be associated with incorrect frame data between the video and audio data. But upon review of multi-hour encodes, the timings are precise and don't desync. The changing information is probably related to ffmpeg introducing padding, but why?

This is a curiosity for which there appears to be no answer, or at least none that are easy to find. Without any real information on how or why it's happening, there's no way to determine how much data is actually being changed. Since the data does get changed, though, it means that putting audio into MKV is a lossy (unless using wav or flac, which seems to hand back the correct timestamps) and destructive process. Converting back and forth is definitely ill-advised.

Concat(astrophe) and Desyncing

During the Warcraft 3 run in early 2019, I ran into a gut-wrenching old enemy - Desyncing. Not a gradual desync like those of projects past, but a desync that abruptly interrupted the video between two recordings.

Ultimately, this was discovered to be a result of building the audio file from the individual recordings independently of the merged video file. It turns out that ffmpeg's concat isn't entirely sure what to do with the timestamps on the audio which, due to OBS, are usually longer than the video files. Sometimes, someone in the middle gets confused and you get what I got - 1-2 seconds of mysterious new audio between sittings that fucks half a video. It's an issue I had mostly dodged in the past thanks to fraps' reliability, which OBS still can't manage to replicate in 2019.
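A simplified model of that failure: if each recording's audio runs slightly longer than its video and the audio tracks are joined on their own, every later segment's audio starts late by the sum of the earlier overhangs. The durations below are hypothetical, and this ignores whatever timestamp handling concat actually does internally.

```python
from typing import List, Tuple


def audio_start_offsets(segments: List[Tuple[float, float]]) -> List[float]:
    """Given (video_seconds, audio_seconds) per recording, return how late each
    segment's audio starts relative to its video when the two streams are
    joined end to end independently."""
    offsets = [0.0]
    for video, audio in segments[:-1]:
        offsets.append(offsets[-1] + (audio - video))
    return offsets


sessions = [(600.0, 601.5), (900.0, 900.0), (300.0, 300.5)]  # hypothetical sittings
print(audio_start_offsets(sessions))  # [0.0, 1.5, 1.5]
```

The jump appears abruptly at the boundary after the first sitting rather than creeping in gradually, which matches the kind of between-recordings desync described above.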

A "solution" is to encode the mixed video files and then rip the audio from that file - this gives the appropriate, synced audio file. It does mean that proposed pipelines in the past all require updating, but this was a given with the extensive volatility of an entirely new setup anyways.
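A sketch of that "merge first, rip second" order using ffmpeg's concat demuxer, built as argument lists you could hand to Python's `subprocess.run(cmd, check=True)`. The file names are hypothetical. `-safe 0` is only needed when the list contains absolute or unusual paths, and `-c copy` assumes every recording shares the same codec settings.

```python
from typing import List, Tuple


def concat_list(paths: List[str]) -> str:
    """Text for a concat-demuxer list file, one `file '...'` line per recording.
    Single quotes inside a path are escaped per ffmpeg's quoting rules."""
    lines = []
    for p in paths:
        escaped = p.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def merge_then_rip(list_file: str, merged: str,
                   audio_out: str) -> Tuple[List[str], List[str]]:
    """Join the recordings first, then take the audio from the joined file."""
    merge = ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_file,
             "-c", "copy", merged]
    rip = ["ffmpeg", "-i", merged, "-map", "0:a", "-c", "copy", audio_out]
    return merge, rip


print(concat_list(["wc3_part1.mkv", "wc3_part2.mkv"]), end="")  # hypothetical names
merge, rip = merge_then_rip("parts.txt", "merged.mkv", "merged_audio.mka")
print(" ".join(merge))
print(" ".join(rip))
```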

Warcraft 3 also confirmed that OBS still hasn't fixed the i444 bug five years later. Yikes.

Variable Fuck Rates

The odd spell of seemingly low frame rates amidst compilations such as XXE has been a years-long observation, and one typically attributed to any number of offenders you could take a swing at.

- Underdeveloped video pipeline.

- Shitty performance.

- Disk access (especially considering fraps' demand on high motion content).

- Vegas being the piece of shit that it is.

The thing is, due to the inconsistency and rarity of the perceived issues I never had the grounds or desire to pursue a formal investigation, especially when I most often attributed it to hardware or performance problems. But during the Dark Souls XE project, I observed severe fps loss during specific periods that were absolutely not a part of the source recordings. This led to some hysterical discoveries.

Oy govna', yew got a loicense for dem assault frames?

As it turns out, the brilliant work of ffmpeg and formats like mp4 and m4v is to take a Constant Frame Rate of 30 from a source mkv and default it to a Variable Frame Rate of anywhere from 29.412 to 1,000.000. Given ffmpeg is set to "copy", this basically makes no sense whatsoever.
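One plausible but unconfirmed contributor to those odd numbers: Matroska stores timestamps at a default resolution of 1 ms, while 30fps frames sit 33.33 ms apart, so copied timestamps alternate between 33 and 34 ms gaps. A tool that derives a frame rate from each gap sees 1000/33 ≈ 30.303 and 1000/34 ≈ 29.412, which happens to match the lower figure above. The sketch just does that arithmetic; it does not reproduce what ffmpeg or MediaInfo do internally.

```python
from fractions import Fraction
from typing import List


def ms_timestamps(fps: int, frames: int) -> List[int]:
    """Ideal frame start times, rounded to whole milliseconds."""
    return [round(Fraction(1000 * i, fps)) for i in range(frames)]


def instantaneous_fps(ts_ms: List[int]) -> List[float]:
    """Frame rate implied by each gap between consecutive timestamps."""
    return [round(1000 / (b - a), 3) for a, b in zip(ts_ms, ts_ms[1:])]


stamps = ms_timestamps(30, 7)
print(stamps)                     # [0, 33, 67, 100, 133, 167, 200]
print(instantaneous_fps(stamps))  # [30.303, 29.412, 30.303, 30.303, 29.412, 30.303]
```

By the same arithmetic a single 1 ms gap would read as 1,000 fps, though whether that is what produced the upper figure here is a guess.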

Research across the internet led to a myriad of reports about Vegas bugging out on videos with a framerate other than 60, where it would sometimes invisibly assign incorrect FPS values to project files while processing them. Some posts pointed to AAC plugins in version 15 as a potential cause. I was using neither AAC nor version 15, but something fucky was definitely afoot.

Something's missing...

I checked some fraps footage from the days of Ricky vs Pupalicks. Mysteriously, there's no actual definition for Constant or Variable framerate, or at least none that MediaInfo displays. Could it be Vegas is similarly confused?

If Vegas is handed a framerate different from the project's, it will drift out of sync. A decimal drift is still a drift, and a long enough video will knock the whole stack out of sync. As I tested further and further with ffmpeg and my problem souls, I found that the "laggy" spot was restricted to about a minute or so around the beginning of the video. Forcing the video to 30fps with -r didn't fix the VFR header, but it did move the problem space about 15 seconds ahead.
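The arithmetic behind "a decimal drift is still a drift": if footage really runs at one rate but gets played at another, the error grows linearly with length. This sketch assumes the simplest reinterpretation (every frame kept, just played at the wrong rate), which may not be exactly what Vegas does; the 29.97 vs 30 pairing is only an example.

```python
def drift_seconds(duration_s: float, actual_fps: float, assumed_fps: float) -> float:
    """How far the video ends up from its audio after `duration_s` seconds if
    its frames are played at `assumed_fps` instead of `actual_fps`.
    Negative means the video finishes early (runs ahead of the audio)."""
    frames = duration_s * actual_fps
    return frames / assumed_fps - duration_s


for minutes in (1, 10, 60):
    print(f"{minutes:>2} min: {drift_seconds(minutes * 60, 29.97, 30):+.2f} s")
```

An hour of 29.97fps footage treated as 30fps ends up about 3.6 seconds off, even though the rates differ by only 0.1%.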

Although I'm not entirely certain what is happening, it seems two layers of total incompetence are at work. First, ffmpeg is creating files with unrelated and weird metadata. Then, Vegas takes that weird data at face value even though the project and the files inside the project both claim to be 30fps. Setting the project to 60 and culling to 30 at the encode level doesn't work either; it still results in hosed sections of the file.

The net result after days of research and dicking around seems to be you are forced to use -vf to assign a CFR framerate. This, according to my tests so far, seems to eliminate the desyncs in vegas - but it comes at additional, pointless processing costs for something I shouldn't need to do to begin with.
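For reference, a sketch of that workaround as an argument list for `subprocess.run`. The `fps` filter drops or duplicates frames to hit a constant rate, which is also why it can't be combined with `-c:v copy`: the video has to be re-encoded, hence the extra processing cost. File names are hypothetical, and ffmpeg picks its default encoder for the output container unless you add your own `-c:v` settings.

```python
from typing import List


def cfr_command(src: str, dst: str, fps: int = 30) -> List[str]:
    """ffmpeg arguments that re-time the video to a constant frame rate
    while passing the audio through untouched."""
    return ["ffmpeg", "-i", src, "-vf", f"fps={fps}", "-c:a", "copy", dst]


print(" ".join(cfr_command("souls_segment.mp4", "souls_segment_cfr.mkv")))
```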

Even worse, along the journey I came across a youtube video in which someone unironically suggested to use Vegas' Resampling.

The Travesty of the World Wide Web and Image Search

Or how Browser Developers are Complete Morons

On the internet, there exists in recent times a small but disastrously successful push to convert the primary format of images on websites to one of the worst implementations of graphics media ever to exist - WebP. Much like other poorly thought out and badly implemented formats, like mp3 and opus, WebP literally has no reason to exist other than being the brainchild of an intern at a popular but universally reviled corporation that hopes to rewrite large swaths of data consumed by end users for no reason other than plain malice.

Grossly enough, some users are so wildly ignorant or willfully malicious that they adopt these pallid standards and subject everyone else in their toolchain, production pipeline, or market to the same significantly reduced quality. Examples include a band or artist who only sells their music as mp3, iTunes, which destroys content through 128kbps AAC encodes, or YouTube, Netflix, and all other streaming services, which remove more than half the data from videos before serving them to you in a temporary online-only format that, unlike physical media, you don't technically own. While one would assume anyone with any dignity or common sense would avoid raging trashfires and further obliteration of the value of their time and money, all too often individuals who use the world wide web embrace their own humiliation and subsequent destruction rather than resist it.

While scouring for media related to projects such as Dungeons & Dragons, you'll be taken to the far corners of the internet, most of which are degenerate. Amongst the worst offenses of degeneracy are platforms that re-encode images into webp, dynamically resize (or "lazy resize") content, or otherwise reprocess images after someone has uploaded them. In some cases you can simply edit a url or access the source to lift the original image; in other cases, like with Pinterest, the originals usually get indexed by sites like Yandex, so you don't need to subject yourself to two atrocious interfaces rather than just one.

Amid the heap of trash, you'd expect your tools, specifically your web browser, to serve you the highest quality version of images available. It just makes sense that when you view images you'd like the least reprocessed, least butchered version available. The fact you have to crawl for those, should they exist, would only be a product of searching the manure left behind by crawlers. Right?

Wrong!

Pale Moon, lauded as being one of the forks for Firefox that supposedly pursues quality, stability and performance over the original, has proven many times its developers are totally clueless if not outright malicious. Today, I was redpilled on just how far their lunacy stretches.

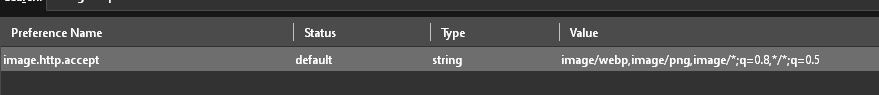

A setting exists in the about:config panel (until it's ultimately removed in favor of hardcoding telemetry and other insanity, a process Firefox and its forks have been steadily working towards completing) called image.http.accept. One can read about this function here, https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Accept , but to summarize the most important part -

In a nutshell, the browser is setting a priority for what type of image it's looking for, should multiple versions be available. It makes common sense that you would always request the highest quality source available - after all, servers and content providers will have already optimized their content for delivery. It also makes sense you'd want to avoid problematic formats like webp that offer no benefits to the end-user but substantially damage the content you are being served.

There is an additional parameter, called q (the quality value), that sets the relative preference for each type. It ranges from 0 to 1, where 0 means "not acceptable", as detailed here - https://restfulapi.net/q-parameter-in-http-accept-header/

Clearly, the only time you'd ever want to be served content at a low quality forcibly is on extremely limited bandwidth, like Canadian mobile plans. On a desktop browser, you'd always want to be forcing the highest quality, asking for pngs when possible. So, you'd want to use a combination of types and q's to force those when possible.
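To make the q mechanics concrete, here's a minimal parser that ranks an Accept header: higher q first, a missing q treated as 1, and q=0 meaning "not acceptable". Breaking ties by list order is a simplification (HTTP itself prefers more specific media ranges over wildcards), and both header strings in the example are illustrative, not any browser's actual default.

```python
from typing import List, Tuple


def parse_accept(header: str) -> List[Tuple[str, float]]:
    """Return (media_range, q) pairs from an Accept header, most preferred first.

    Missing q counts as 1.0 and q=0 ("not acceptable") entries are dropped.
    Ties keep their listed order, which is a simplification: HTTP prefers the
    more specific media range (image/png over image/*) rather than list order.
    """
    ranked = []
    for index, part in enumerate(header.split(",")):
        fields = [f.strip() for f in part.split(";")]
        if not fields[0]:
            continue                      # tolerate stray commas
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                q = float(value)
        ranked.append((-q, index, fields[0]))
    ranked.sort()                         # highest q first, then list order
    return [(media, -neg_q) for neg_q, _, media in ranked if neg_q < 0]


webp_first = "image/webp,image/png,image/*;q=0.8,*/*;q=0.5"          # illustrative
no_webp = "image/png,image/jpeg;q=0.9,image/*;q=0.8,image/webp;q=0"  # illustrative
print(parse_accept(webp_first))
print(parse_accept(no_webp))
```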

So, what do modern browsers, particularly Pale Moon, do?

Gaze now into the face of despair and know the truth of Man - that all is as evil in the world and deserving only of God's wrath.

That's right. The Pale Moon developers feel that webp is so great that it should be forced upon everyone who ever uses their browser, if possible. The q setting helps ensure that, since the default is 1. The ordering of the list is important - they first prioritize webp, then png. Since no content on the internet is delivered in png except in extremely rare cases when the server holds an original png from an uploader, the image/* filter, which covers jpeg, gets dropped in priority, and you're most likely to get hit by webp in aggregate websites and major image hosts. This is particularly noticeable in Yandex when I am delivered webp constantly even though I am seeing jpeg urls. That's right! Shitty sites like Pinterest actually re-encode jpegs into an even shittier format automatically, and Pale Moon is set to prioritize those re-encodes above their originals.

Pale Moon isn't the only browser encouraging this insanity.

Waterfox also forces webp when it can.

Pale Moon explicitly went out of their way to invisibly make the internet shittier for all of their users. You have to actually go into about:config, hunt this setting down, know how to change it, and what to change it to, to experience many parts of the internet in a manner which the developers of that content actually intended.

This should, at least where accept headers are respected, hand you pngs, otherwise jpegs, in the place of webp filth. Preferably, webp should be entirely and manually excluded from this list, but some sites deliver content exclusively in this shit. Gross!

Changing the about:config is effectively pissing into an ocean. Change happens at the content delivery level - developers implementing webp in any form need to be ostracized and abandoned before it becomes too widespread to stop. Seeing as no one gives a shit about their videos being annihilated by '50s standards, I don't particularly expect anyone to bat an eye at the rest of the internet's carcass getting puked on either.

Webp is just one of the innumerable sins google has shat onto a perfectly functional internet over the last 20 years, and people still keep blindly chugging the floaters as memory and CPU costs of everyday websites skyrocket to unbelievable levels while the quality of the content they deliver drops lower and lower.

CDNs Are Cancer

What's worse than having a browser with improperly configured default accept headers feeding you low-quality images? CDNs employed by servers that cache high-quality images and intentionally serve you low-quality, rescaled versions instead, making vast portions of the internet lower-quality just for the fuck of it. This is such a rampant and widespread process that entire product lines, like kraken.io, are based on it. To make things even worse, these products tend to be written in javascript. This is why platforms like Yandex feed you insanely overcompressed and small images most of the time - caching services employed by shitty aggregation websites recompress already shittily compressed jpegs and destroy high-quality content wherever possible.

And, of course, you can't opt out of the grossness, you just have to hope somewhere a source file exists that hasn't been raped by silicuck valley parasites yet.